The DGX Station A100 is an AI workstation announced by NVIDIA. Its tower case houses four Ampere-based NVIDIA A100 Tensor Core GPUs, providing data center-class performance without a data center. Researchers can use it at home or in the office by simply plugging the power cord into an outlet.

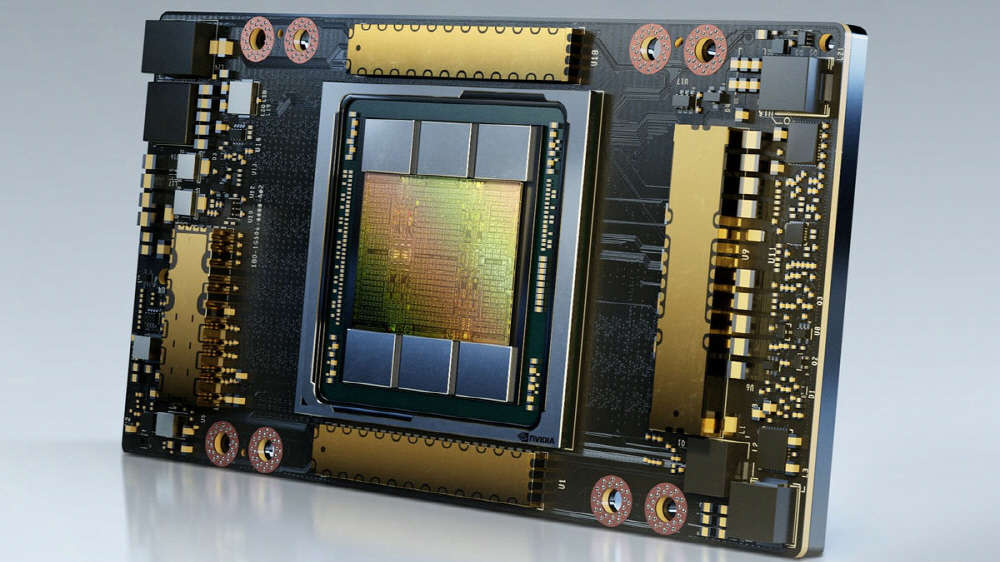

In addition to the version with 40GB of GPU memory, NVIDIA is also preparing a new 80GB version of the A100. With four of these GPUs connected through third-generation NVLink, the system can handle up to 320GB of GPU memory. Other key specifications include a 64-core AMD EPYC CPU with 512GB of RAM, a 1.92TB NVMe SSD for the operating system, and a 7.68TB NVMe SSD for data cache storage. It has four Mini DisplayPort outputs, all of which support 4K video. It also comes with wired LAN ports: two 10GbE ports and one 1GbE port for management.
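The 320GB figure follows from the GPU count and the per-GPU memory. As a minimal sketch of that arithmetic (the function and constant names are hypothetical, chosen only for illustration):

```python
# Aggregate GPU memory of a four-GPU DGX Station A100,
# using only the figures quoted in the article.
GPUS_PER_STATION = 4  # four A100 GPUs in the tower


def total_gpu_memory_gb(per_gpu_gb: int, gpu_count: int = GPUS_PER_STATION) -> int:
    """Return the combined GPU memory in GB across all GPUs."""
    return per_gpu_gb * gpu_count


# Compare the 40GB and 80GB A100 variants.
totals = {per_gpu: total_gpu_memory_gb(per_gpu) for per_gpu in (40, 80)}
print(totals)
```

The 80GB variant gives the 320GB quoted above; by the same arithmetic, the 40GB variant would give 160GB.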

The DGX Station A100 is a workgroup server that supports NVIDIA Multi-Instance GPU (MIG) technology, which partitions its GPUs into as many as 28 instances that run in parallel and can be accessed by multiple users. NVIDIA has already revealed that BMW and Lockheed Martin are using DGX Stations. The SXM4 form factor DGX A100 also offers the 80GB GPU as an additional option. NVIDIA also announced a scalable HGX AI supercomputing platform for the DGX A100 640GB server system, aimed at very large AI workloads.
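The instance and memory counts above can be reproduced with simple multiplication. The sketch below assumes two figures that the article does not state directly: that MIG splits each A100 into at most seven instances, and that the DGX A100 carries eight GPUs. Both are taken from NVIDIA's public specifications, and the names used are hypothetical.

```python
# Assumption (not stated in the article): MIG allows up to 7 instances per A100.
MAX_MIG_INSTANCES_PER_A100 = 7
# Assumption (not stated in the article): a DGX A100 server holds 8 GPUs.
DGX_A100_GPU_COUNT = 8


def max_mig_instances(gpu_count: int, per_gpu: int = MAX_MIG_INSTANCES_PER_A100) -> int:
    """Upper bound on MIG instances across all GPUs in a system."""
    return gpu_count * per_gpu


station_instances = max_mig_instances(4)      # DGX Station A100: four GPUs
dgx_a100_memory_gb = DGX_A100_GPU_COUNT * 80  # 80GB A100 variant

print(station_instances, dgx_a100_memory_gb)
```

Under these assumptions, four GPUs yield the 28 instances mentioned for the DGX Station A100, and eight 80GB GPUs account for the "640GB" in the DGX A100 system name.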

It is not intended for general-purpose use such as PC gaming, but it is expected to provide an excellent working environment for AI researchers.