During GTC 2021, an online technology conference held on April 13, NVIDIA announced DRIVE Atlan, a next-generation AI-enabled SoC for autonomous vehicles that delivers 1,000 TOPS of performance. DRIVE Atlan has 33 times the computing power of the autonomous-driving SoCs currently in practical use, and NVIDIA aims to put it into production vehicles in 2025.

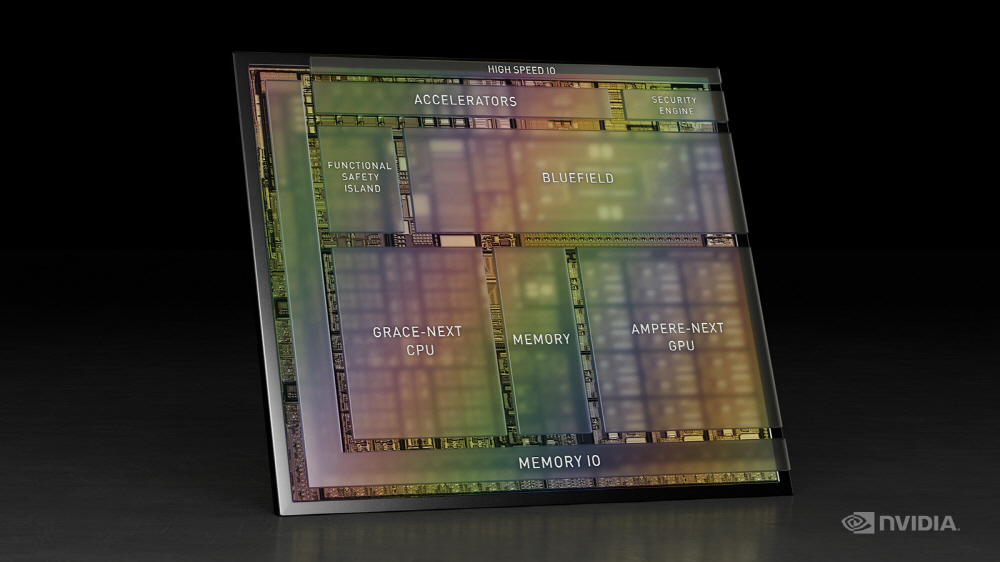

DRIVE Atlan is an SoC designed exclusively for autonomous vehicles. In addition to NVIDIA's next-generation GPU architecture, Arm-based CPU cores, and deep learning and computer vision accelerators, it is the first NVIDIA SoC to integrate BlueField, a DPU (data processing unit) that supports the complex computing and AI workloads required for autonomous driving.

DRIVE Atlan's computing power of 1,000 TOPS compares with 30 TOPS for DRIVE AGX Xavier and 254 TOPS for DRIVE AGX Orin, NVIDIA's current platforms for cars and trucks. NVIDIA describes DRIVE Atlan as an in-vehicle data center that provides the computing capability needed to build software-defined vehicles.

However, DRIVE Atlan is still under development and is targeted at 2025 model-year vehicles. Although DRIVE Atlan was designed from scratch, it can be programmed through the CUDA environment and the TensorRT APIs and libraries, just like DRIVE AGX Xavier and DRIVE AGX Orin.

NVIDIA also announced the eighth generation of its Hyperion autonomous driving platform, which achieves Level 4 autonomous driving by combining DRIVE AGX Orin with 12 exterior cameras, 3 interior cameras, 9 radars, and 1 lidar sensor.

Beyond monitoring the surroundings for autonomous driving, the eighth-generation Hyperion uses the DRIVE AGX Orin control system to handle the vehicle's speed display and UI functions such as air conditioning, multimedia playback of music and images, and recognition of occupants' faces inside the vehicle. According to NVIDIA, the eighth-generation Hyperion is expected to be released in the second half of 2021. In the keynote, it was presented using a CG model of a concept car from Mercedes-Benz, which partnered with NVIDIA in June 2020.

NVIDIA also announced that it will expand its cooperation with Swedish automaker Volvo and equip future Volvo cars with DRIVE AGX Orin. DRIVE AGX Orin will first be installed in the XC90, scheduled to be announced in 2022. Combined with software developed by Volvo subsidiary Zenseact and with backup steering and braking systems, it is intended to achieve Level 4 autonomous driving, that is, fully autonomous driving under certain conditions.

NVIDIA CEO Jensen Huang said that the transportation industry needs a computing platform it can rely on for decades. Software investments are expensive, and they cannot be repeated for every single vehicle. He explained that NVIDIA DRIVE is an advanced AI and AV computing platform that maintains architectural compatibility across multiple generations, backed by a comprehensive software and developer ecosystem. He emphasized that the company's roadmap represents truly tremendous technology and that it will make safe autonomous vehicles possible by fusing NVIDIA's strengths: AI, automotive, robotics, safety, and data centers secured by BlueField.

Meanwhile, NVIDIA announced that it has begun offering Jarvis, a software framework for building conversational AI. Jarvis is trained on vast amounts of data, and developers can use it to build interactive AI agents with high-accuracy automatic speech recognition and language understanding.

Jarvis enables new language-based applications that were not possible until now, opening up a variety of service possibilities: digital nurses that monitor patients 24 hours a day to ease the burden on medical workers, and real-time translation that supports cross-border collaboration and lets viewers watch live content in their native language.

Jarvis is built on models trained on more than 1 billion pages of text and 60,000 hours of speech data covering different languages, accents, environments, and jargon. It uses GPU acceleration to run the end-to-end speech pipeline, from listening and comprehension to response generation, in under 100 milliseconds. Speech pipelines deployed in the cloud and in data centers can respond instantly to millions of users.

Developers can easily adapt the models to their own tasks by training, fine-tuning, and optimizing them with a framework called TAO, without needing advanced AI expertise. NVIDIA CEO Jensen Huang said that breakthroughs in deep learning for speech recognition, language understanding, and speech synthesis are producing excellent cloud services, and that Jarvis will bring this advanced conversational AI beyond the cloud, delivering AI services wherever users are.

During the event, NVIDIA also announced Morpheus, an open AI framework for detecting and defending against cyberattacks in real time. Morpheus is a cybersecurity framework optimized for the cloud. It uses AI to identify and protect against threats and anomalies such as leaks of unencrypted data, phishing attempts, malware compromises, and server errors or failures. Morpheus comes with pre-trained AI models, and developers can also integrate their own models.

NVIDIA explains that using its BlueField data processing unit (DPU), a chip for data centers, together with Morpheus turns every compute node in the data center network into a cyber-defense sensor that detects threats. As a result, organizations can protect everything from the data center core to the edge, quickly analyzing every packet without duplicating data.

NVIDIA points out that existing AI security tools typically sample only about 5% of network traffic data, so their threat-detection algorithms rely on incomplete models. By contrast, the company emphasized, Morpheus can analyze every packet, examining more data than traditional cybersecurity frameworks without sacrificing cost or performance.

CEO Jensen Huang emphasized that the zero-trust security model requires real-time monitoring of every transaction in the data center. This is a significant technical challenge, because server intrusions and threats must be detected immediately in order to operate a modern data center securely.

Major hardware and software cybersecurity vendors are currently working with NVIDIA to optimize their data center security products and integrate them with the Morpheus framework. Partners include ARIA Cybersecurity Solutions, Cloudflare, F5, Fortinet, Guardicore, Canonical, Red Hat, and VMware.

NVIDIA also announced next-generation GPUs for laptops, desktops, and servers based on the Ampere architecture during the event.

Eight GPUs were announced: the NVIDIA RTX A5000 and A4000 for desktops; the RTX A5000, A4000, A3000, and A2000 laptop GPUs; and the NVIDIA A16 and NVIDIA A10 for data centers.

All of these products use the Ampere architecture. They feature second-generation RT cores that deliver up to twice the throughput of the previous generation and can run ray tracing concurrently with shading or denoising; third-generation Tensor cores that deliver up to twice the throughput of the previous generation (up to 10 times with sparsity) and support the TF32 and BFloat16 data formats; and CUDA cores with up to 2.5 times the FP32 throughput of the previous generation, significantly boosting graphics and compute workloads.

Among the desktop GPUs, the RTX A5000 has 24GB of GDDR6 memory and the A4000 has 16GB of GDDR6 memory. NVIDIA vWS software is supported, allowing remote users to run workloads such as advanced design and AI computing. The cards use PCIe Gen 4, which provides twice the bandwidth of the previous generation.

The laptop GPUs offer up to 16GB of GPU memory and use third-generation Max-Q technology for power efficiency. The RTX A5000 laptop GPU has 6,144 CUDA cores, 192 Tensor cores, and 48 RT cores, and consumes 80-165W of power. The A4000 has 5,120 CUDA cores, 160 Tensor cores, and 40 RT cores. The A3000 has 4,096 CUDA cores, 128 Tensor cores, and 32 RT cores, while the A2000 has 2,560 CUDA cores, 80 Tensor cores, and 20 RT cores and consumes 35-95W.

NVIDIA also introduced the T1200 and T600 laptop GPUs, based on the previous-generation Turing architecture. Both are designed for professional work across multiple applications.

The data center GPUs are aimed at designers and engineers. The A16 enables virtual desktop infrastructure (VDI) environments with up to twice the user density of the previous generation at a lower total cost. The A10 delivers up to 2.5 times the virtual workstation performance of the previous generation. Combined with NVIDIA RTX Virtual Workstation and NVIDIA Virtual PC software, the A16 and A10 improve performance and memory speed and streamline workflows spanning graphics, AI, and VDI, so that, according to NVIDIA, users can spend their time on efficient, productive work.