When it comes to deepfakes, what first comes to mind is a composite image or video in which AI replaces a person's face and body with someone else's. For example, people make jokes by swapping a famous movie star's face onto another actor. However, deepfakes that turn landscape imagery into images of places that do not exist may also be a problem.

Geographers, for example, are concerned about deepfakes of satellite images of the ground. Suppose images are doctored or circulated to make it look as if a large-scale rally or protest is taking place. Or suppose they make it look as if a flood or wildfire has caused enormous damage somewhere in the world. Either way, the credibility of satellite imagery as a whole could be compromised. Satellite images have been analyzed in the past to report on Uyghur detention camps. But if the government concerned claims such images are fake, it may become difficult to treat them as evidence.

In 2019, the U.S. military pointed to the possibility of exactly this happening. Suppose the geographic planning software used to prepare an operation in advance were fed fake image data. Serious problems could follow: troops sent to a bridge that is a key objective of the operation could find no bridge there and instead walk into a trap.

To address this problem, a research team led by Bo Zhao, a professor of geography at the University of Washington, recently published a paper on "deep fake geography." The study documents experiments in generating fake satellite images and in detecting them.

Deliberate falsehoods in maps are not new in themselves. For example, cartographers have long inserted a fictitious village into their maps, in place of a watermark, so they can catch others who copy their work and cut into their profits. New technologies, however, create new challenges. Zhao explains that deepfake satellite images are already sophisticated enough to look genuine to untrained eyes. Satellite images are usually produced by trusted sources such as government agencies or experts, which is also why they are widely regarded as highly reliable.

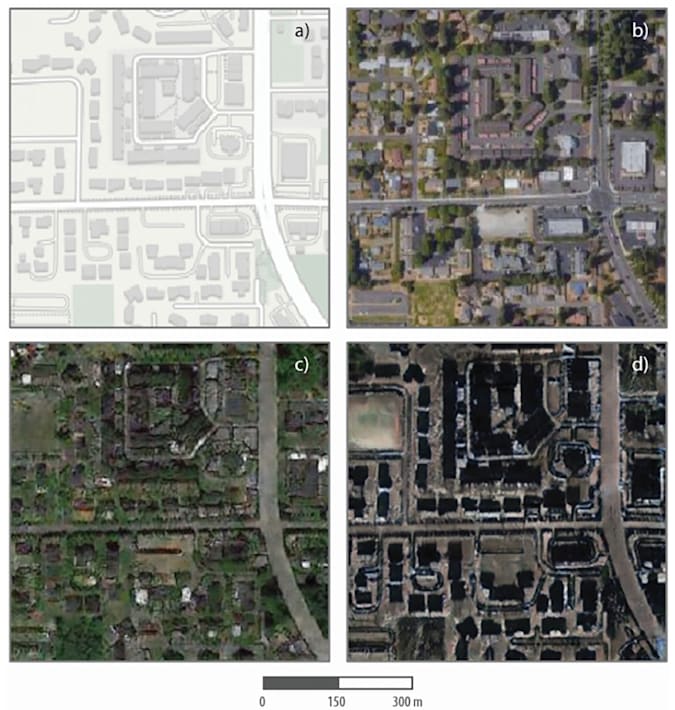

In their research, the team developed software that generates fake satellite images using an AI technique called a generative adversarial network (GAN). The image characteristics the model learned were then injected into various base maps to create geographic deepfakes. In the experiment, the roads and buildings of Tacoma, Washington, served as the base map. The visual features of Seattle's streets or Beijing's high-rise districts were then overlaid onto it to render the final images. The results are not perfect, but without any explanation, many people might believe such a place really exists.

It did not take long for face-swapping deepfake technology to leak out of the lab and be misused. It may be only a matter of time before satellite deepfakes start being used somewhere, even if only as a joke.