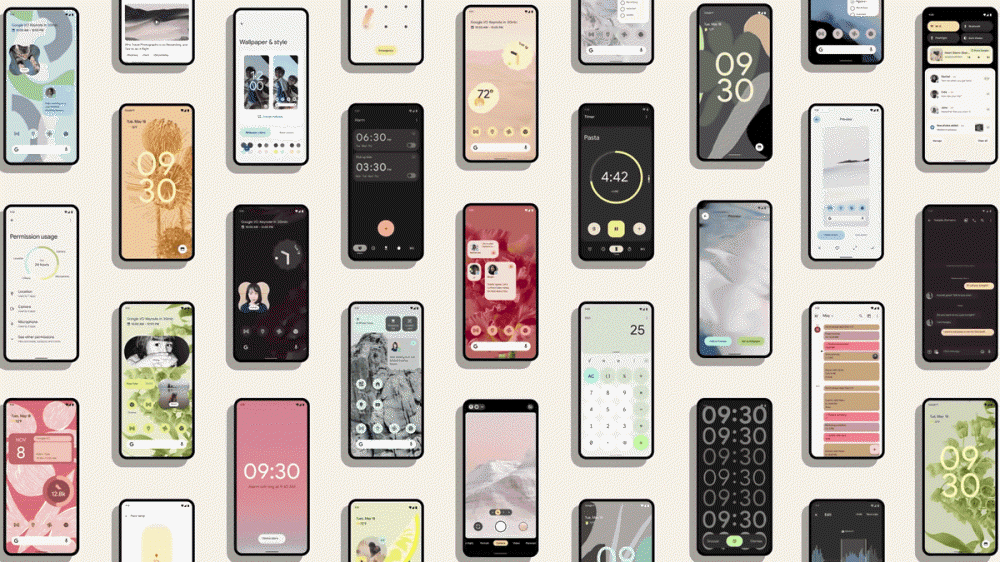

At its annual developer conference, Google I/O 2021, Google announced the public beta of Android 12, the next major version of Android. Google calls Android 12 its most ambitious release yet and describes its sweeping UI overhaul as the biggest design change in Android's history.

The Android 12 beta arrived on the schedule Google announced when it released the developer preview. Under that plan, the second and third betas will ship around June and July, and the final beta in August. The most notable change in this beta is a large-scale UI redesign called Material You. Its headline feature is a customization function called color extraction, which automatically extracts colors from the wallpaper the user has set and applies them across the entire system.

Next is the widget redesign. As part of the overall design overhaul, widgets are now more useful and easier to find. Their appearance has changed: each widget has rounded corners and is automatically padded to fit the home screen. Widgets also pick up the system colors, so the entire device can share a consistent color scheme. New widgets can also include interactive controls such as checkboxes, switches, and radio buttons.

Responsive layouts, which let widgets scale automatically, allow widgets to be optimized not only for regular smartphones but also for foldables and tablets.

A new system-wide stretch overscroll effect also shows you when you have reached the end of the content while scrolling. Audio has been reworked along with the visuals: when you switch between apps that play audio, the sound transitions smoothly instead of the apps' audio overlapping.

In the Android 12 beta, Google cut the CPU time needed by core system services by 22.1%, improving speed and responsiveness. It also reduced the system server's core usage by 15.4%, improving power efficiency and battery life. Reducing lock contention and latency has also made apps load faster; for example, Google Photos reportedly launches 36% faster.

Google is also standardizing device capabilities across Android devices. Working with Android ecosystem partners, it has defined a standard for device capabilities called the performance class. The performance class covers a wide range of criteria, including camera startup and latency, codec availability, encoding quality, minimum memory size, screen resolution, and read/write performance, and apps can check which class a device meets.

Google introduced automatic permission reset in 2020, and the feature was well received: it reportedly reset permissions for 8.5 million apps in two weeks. Building on it, Android 12 adds app hibernation, which automatically identifies apps that have not been used for a long time. A hibernated app not only has all of its permissions revoked but is also force-stopped, freeing temporary resources such as memory and storage. Hibernation helps save device storage and improves security.

Previously, apps that paired with Bluetooth devices had to request location permission even if they never used the device's location. In the Android 12 beta, an app can request permission to scan for nearby Bluetooth devices without requesting location permission.

Android already offered options such as allowing location access only while the app is in use, or only this one time. The Android 12 beta adds the option of granting approximate location, which gives an app only the coarse location information it needs. Google recommends that developers request approximate location when their app does not need a precise one.

The Android 12 beta is open not only to developers but also to early adopters, and it is available not only on Google's Pixel phones but also on devices from manufacturers such as ASUS and OPPO. Google says it hopes more users will try their apps on the Android 12 beta and report any problems.

◇ New APIs for ARCore = At the event, Google announced two new APIs for ARCore, the AR development kit it develops and provides. With these APIs, apps can create more detailed depth maps than before and use AR with recorded video.

ARCore, launched in 2018, has been installed more than 1 billion times, and more than 850 million Android devices currently run ARCore to deliver AR experiences. Google announced the latest version, ARCore 1.24, which introduces the Raw Depth API and the Recording and Playback API.

In 2020, Google launched the Depth API, which generates depth maps using a single camera to enhance AR experiences on Android devices. The newly announced Raw Depth API builds on the existing Depth API to produce more detailed depth maps for richer AR. Alongside each raw depth map, it provides a corresponding confidence image that gives a confidence value for each pixel's depth estimate, so apps get more data points and a more realistic picture of depth. By understanding how the depths and positions of objects relate, apps can deliver more accurate AR experiences, including accurate measurement of distances in space.
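The confidence image is what lets an app decide which raw depth samples to trust. The sketch below is a minimal, hypothetical illustration in plain Python, not ARCore code: it assumes the depth image and confidence image arrive as equally sized lists of rows, with depth in millimetres and confidence on an 8-bit scale from 0 to 255, and the function name `filter_depth_by_confidence` and the default threshold of 128 are invented for illustration.

```python
from typing import List, Optional

# Hypothetical representation (not the ARCore API): images as lists of rows.
# Depth values are assumed to be millimetres; confidence is assumed 0..255.
DepthImage = List[List[int]]
ConfidenceImage = List[List[int]]


def filter_depth_by_confidence(
    depth: DepthImage,
    confidence: ConfidenceImage,
    min_confidence: int = 128,  # hypothetical threshold
) -> List[List[Optional[int]]]:
    """Keep depth samples whose confidence meets the threshold; mask the rest as None."""
    if len(depth) != len(confidence):
        raise ValueError("depth and confidence images must have the same height")
    filtered: List[List[Optional[int]]] = []
    for depth_row, conf_row in zip(depth, confidence):
        if len(depth_row) != len(conf_row):
            raise ValueError("depth and confidence images must have the same width")
        # Each pixel's depth is kept only if its own confidence is high enough.
        filtered.append(
            [d if c >= min_confidence else None for d, c in zip(depth_row, conf_row)]
        )
    return filtered


depth = [[1200, 1250], [0, 3000]]
confidence = [[255, 40], [0, 200]]
print(filter_depth_by_confidence(depth, confidence))  # [[1200, None], [None, 3000]]
```

Masking low-confidence pixels with `None` instead of dropping them keeps the grid shape intact, so every remaining sample still lines up with its pixel coordinates.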

Because the Raw Depth API does not require a dedicated depth sensor such as a time-of-flight (ToF) sensor, it works on most Android devices that already support ARCore. Google adds that the quality is even higher on devices that do have a ToF sensor.

Another problem that plagues AR app developers is that they have to physically visit a location to test how their app behaves in a specific environment. Developers don't always have access to the location they want, lighting conditions change over time, and the data the sensors capture differs from one test session to the next.

The Recording and Playback API solves this problem by letting developers record IMU (inertial measurement unit) and depth sensor data along with video of the location. With this API, a user could, for example, enjoy an AR experience set in a park while sitting at home, using video recorded there earlier. The API benefits AR app developers as well as users. DiDi, a Chinese company, used the Recording and Playback API to build AR navigation into its DiDi-Rider app; with recorded footage, it could reportedly test AR features without repeatedly visiting the site.

◇ Five new features in Google Maps = Google next announced new features for Google Maps. The first is routing that reduces hard braking. Whenever you search for a driving route, Maps considers factors such as the number of lanes and how curvy the road is, and shows several routes to the destination. If two routes have the same or nearly the same arrival time, the one likely to involve fewer hard-braking moments is recommended.
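The recommendation rule described above can be sketched as a small selection function. This is a hypothetical illustration, not Google Maps' actual algorithm: the `Route` type, the per-route `predicted_hard_brakes` estimate, and the one-minute tolerance standing in for "nearly the same" arrival time are all assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Route:
    name: str
    eta_minutes: float
    predicted_hard_brakes: int  # hypothetical per-route estimate


def pick_recommended_route(routes: List[Route], eta_tolerance_minutes: float = 1.0) -> Route:
    """Among routes whose ETA is within the tolerance of the fastest,
    pick the one with the fewest predicted hard-braking events."""
    if not routes:
        raise ValueError("no routes to choose from")
    fastest_eta = min(r.eta_minutes for r in routes)
    # Only routes that arrive at (nearly) the same time are eligible.
    candidates = [r for r in routes if r.eta_minutes - fastest_eta <= eta_tolerance_minutes]
    # Fewest hard brakes wins; ties are broken by the earlier arrival.
    return min(candidates, key=lambda r: (r.predicted_hard_brakes, r.eta_minutes))


routes = [
    Route("A", 20.0, 7),
    Route("B", 20.5, 2),
    Route("C", 25.0, 0),
]
print(pick_recommended_route(routes).name)  # B
```

Route C has the fewest predicted hard brakes but arrives five minutes later, so it falls outside the tolerance and is never recommended here, mirroring the condition that arrival times must be the same or nearly the same.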

Next is a more detailed Live View. You can tap the Live View button directly from the map to open the augmented reality view, where you can see new street signs and information about places, such as how busy they are and photos. When traveling, you can also see how far a selected store is from the hotel where you are staying.

Next are more detailed maps. Maps will show the locations of sidewalks, crosswalks, and pedestrian islands more clearly, and will draw the shape and width of streets to scale. This information helps people using a wheelchair or pushing a stroller find the most suitable route. This feature will be available in more than 50 cities, including Berlin, by the end of 2021.

Next is a visualization of how busy an area is. Busy parts of a neighborhood are highlighted in a different color, which can help you avoid crowds when visiting a lively area.

The last feature tailors the information shown to your situation. Maps highlights the most relevant places based on the time of day and whether you are traveling. For example, if you open Maps at 8 a.m. on a weekday, you will see nearby coffee shops rather than dinner spots. If you tap a place such as a burger shop, related places, such as other burger shops nearby, are highlighted. When you are traveling, tourist attractions are highlighted instead. These features are expected to roll out on Android and iOS in the coming months.

◇ Improving how smartphone cameras capture skin tones = At the event, Google announced that it is improving its camera algorithms so that smartphone photography works for everyone and renders skin tones more accurately. It is easy to think of photography as objective, but in reality the choices and decisions of the people who build a camera are embedded in the tool, and people of color have rarely had a voice in those decisions.

Google therefore launched a project aimed at making all of its camera and imaging products deliver the best possible results for everyone, including people with darker skin tones. Working with experts who have taken thousands of photos, it diversified its datasets and trained its algorithms on them. It also improved the accuracy of its automatic white balance and automatic exposure algorithms.

This technology will come to the upcoming Pixel smartphone, and Google will share what it has learned with Android partners and the wider ecosystem.

◇ Building a unified smartwatch platform with Samsung Electronics = Google also announced an overhaul of its smartwatch software platform, Wear OS by Google. As part of this overhaul, Google and Samsung Electronics will jointly build a new unified platform.

Google said it will combine the strengths of Wear OS and Tizen into a single platform, and that by working together the two companies can offer faster performance, longer battery life, and more apps. This makes the integration with Tizen, the platform used in the Galaxy Watch series, official.

Working together, the two companies made apps launch up to 30% faster on the latest chipsets and delivered smoother UI motion and animations. By optimizing the operating system and using low-power hardware cores, they also extended battery life: even with the heart rate sensor running continuously during the day and sleep tracking at night, the watch still has battery left the next morning.

You can switch apps simply by double-tapping the side button, and a new feature called Tiles gathers the most important information from apps so you can get to what you want right away. Google's own apps, including Google Maps, Google Pay, and YouTube Music, have been significantly updated for the platform, and Fitbit, which Google acquired, is being added to the default apps.

On the unified platform, Google, Samsung Electronics, and other device manufacturers can each add their own customized user experiences, which should give users more options. Google will also release new APIs that make it easier to develop for the new Wear OS.

◇ A ‘magic window’ for lifelike face-to-face conversation = Google announced Project Starline, a new communication tool that makes you feel as if you are talking face to face even when you are far apart.

Google describes Project Starline as a kind of magic window. Through it, you can talk naturally, communicate with gestures, and make eye contact. Because it is still in development, Project Starline requires custom-built hardware and highly specialized equipment. It is, however, already being tested internally in some Google offices.

Project Starline lets people communicate as if they were physically together. Making this possible required three things: first, 3D imaging technology that captures people as they are; second, real-time compression that transmits the data efficiently over existing networks; and third, rendering technology that faithfully reproduces the transmitted 3D image.

Project Starline also applies technologies such as computer vision, machine learning, and spatial audio, which are expected to make even sign language conversations feel as natural as meeting in person.

Project Starline is developing a groundbreaking light field display system that renders 3D images with volume and depth without extra equipment such as smart glasses or headsets. Beyond Google's own offices, it is being demonstrated to select enterprise partners in industries such as healthcare and media to gather feedback on the tool and its applications, and Google plans to run trials with enterprise partners in the second half of 2021. Google's near-term goal is to make Project Starline more affordable and accessible.

◇ AI that checks for skin conditions from a photo = Google also announced that it has developed a web-based tool that helps people understand what is going on with their skin. This is not the first research on identifying skin conditions with machine learning: in 2017, a research team at Stanford University published an algorithm that identifies skin cancer in images of skin.

The research behind this tool was published by Google's team in the journal Nature Medicine in May 2020. According to the paper, the AI system for evaluating skin conditions achieved accuracy on par with U.S. board-certified dermatologists. Google also reports that non-specialist doctors were able to identify skin conditions more accurately with the AI's help.

To use the web app, you take three photos of the skin, hair, or nail concern from different angles with your smartphone camera. After you answer questions about things like your skin type and when the problem started, the AI model analyzes the symptoms and shows information from reliable sources. However, the tool does not provide a diagnosis or medical advice.

According to Google, the AI was trained on more than 65,000 images and case data of diagnosed skin conditions, millions of curated images of skin concerns, and thousands of examples of healthy skin, so that the tool works across age, sex, race, and skin type. Google reports that the tool has received the CE mark as a Class I medical device under the EU Medical Device Regulation. Google plans to launch the tool as a pilot in the second half of 2021.

◇ Conversational AI that can talk about anything = Google also announced LaMDA, an AI specialized for natural conversation. LaMDA is built on Transformer, a neural network architecture for language understanding. Other Transformer-based language models include Google's BERT and OpenAI's GPT-3, but LaMDA is designed specifically for free-flowing, open-ended conversation.

According to Google, although conversations tend to revolve around specific topics, they often meander: a chat about a TV show might move to the country where it was filmed and then to that country's local cuisine. Ordinary chatbots, which tend to stay within predefined topics, struggle to follow such shifts. LaMDA (Language Model for Dialogue Applications), by contrast, can reportedly engage on a seemingly endless range of topics.

◇ Using an Android phone as a car key or TV remote = Google also announced features that let you use your smartphone as a car key or a TV remote.

The first of these new device-connectivity features for Android is a digital car key that lets an Android smartphone act as your car key. A card-like image of the key appears at the top of the screen, with the car model name shown below it. The digital car key uses NFC, so you can lock and unlock the car simply by holding your phone near it. It is expected to launch in fall 2021 on Google Pixel and Samsung Galaxy phones.

Google also announced the ability to use a smartphone as a TV remote. You can use your phone like a touchpad to control the TV, or type on your phone to enter text easily. This feature is expected to launch in the second half of 2021 and will reportedly work on more than 80 million devices running Google TV and Android TV.

Google also announced Smart Canvas, an update to Google Workspace (renamed from G Suite in 2020) designed to help people collaborate more efficiently. Typing @ brings up suggested people, files, and meetings, and a new pageless format removes page boundaries so documents expand to fit your device and screen; content can still be split into pages when printing or exporting to PDF. In the coming months, Google will add emoji reactions, language suggestions and writing assistance, meeting templates in Google Docs, checklists that link to other content, and table templates. You will be able to present Google Docs, Sheets, and Slides content directly while in Google Meet, which will also gain live captions and translation. Google Sheets gets a new timeline view and enhanced assisted analysis, and you can move straight from a discussion in Google Chat to creating content.