Triton, an open-source programming language for neural networks, has been unveiled with the aim of making GPU programming more productive and faster than with CUDA, the general-purpose parallel computing platform for GPUs developed by Nvidia. It is a language and compiler for writing efficient deep learning primitives, and its development repository is public on GitHub.

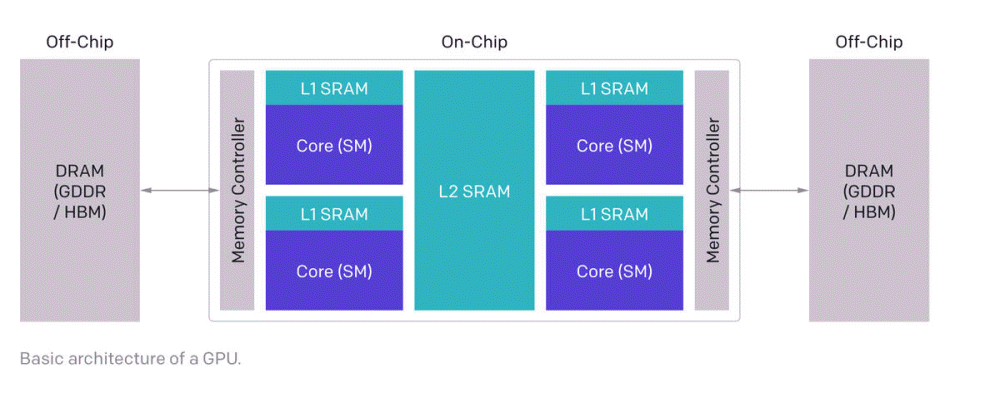

Version 1.0 was released by OpenAI, an AI research organization, as an open-source, Python-based programming language for neural networks. The reason OpenAI developed Triton as an alternative to CUDA is simple: Nvidia GPUs are too difficult to program, even with CUDA. Specifically, when writing native GPU kernels and functions, the programmer has to manage how data and instructions move through the memory hierarchy of a many-core GPU, which makes programming complicated.

According to OpenAI, Triton allows even researchers with no CUDA experience to write GPU code that is about as efficient as what an expert GPU programmer would produce. For example, it is possible to write an FP16 matrix multiplication kernel that matches cuBLAS performance in fewer than 25 lines of code, a level of performance that many GPU programmers cannot reach.

The goal is to create a viable alternative to CUDA for deep learning. Triton is aimed at machine learning engineers and researchers who have strong software engineering skills but are not familiar with GPU programming. OpenAI says that Triton is already in use internally and that its researchers have used it to write kernels up to twice as efficient as equivalent PyTorch implementations.

With Triton, developers write code in Python using a dedicated library and JIT-compile it to run on the GPU. This allows Triton code to integrate with the rest of the Python ecosystem used for developing machine learning solutions.
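As a rough illustration of that workflow, the sketch below follows the general pattern of the vector-addition example in Triton's tutorials. It assumes the `torch` and `triton` packages and a CUDA-capable GPU; the import guard only lets the snippet load on machines without them. The names `add_kernel`, `add`, `add_reference`, and `HAVE_TRITON`, along with the block size of 1024, are chosen here for illustration and do not come from the announcement.

```python
# Sketch only: assumes Linux, an Nvidia GPU, and the torch + triton packages.
try:
    import torch
    import triton
    import triton.language as tl
    HAVE_TRITON = torch.cuda.is_available()
except ImportError:
    HAVE_TRITON = False


def add_reference(x, y):
    """Plain-Python elementwise add, used only to check the GPU result."""
    return [a + b for a, b in zip(x, y)]


if HAVE_TRITON:

    @triton.jit  # JIT-compiled for the GPU the first time it is launched
    def add_kernel(x_ptr, y_ptr, out_ptr, n_elements, BLOCK_SIZE: tl.constexpr):
        # Each program instance handles one contiguous block of elements.
        pid = tl.program_id(axis=0)
        offsets = pid * BLOCK_SIZE + tl.arange(0, BLOCK_SIZE)
        # Mask out offsets past the end of the arrays.
        mask = offsets < n_elements
        x = tl.load(x_ptr + offsets, mask=mask)
        y = tl.load(y_ptr + offsets, mask=mask)
        tl.store(out_ptr + offsets, x + y, mask=mask)

    def add(x, y):
        """Launch add_kernel over two same-shaped CUDA tensors."""
        out = torch.empty_like(x)
        n = out.numel()
        grid = (triton.cdiv(n, 1024),)  # number of program instances
        add_kernel[grid](x, y, out, n, BLOCK_SIZE=1024)
        return out

    # Small demo: compare the GPU result with the plain-Python reference.
    a = torch.arange(5, dtype=torch.float32, device="cuda")
    b = torch.ones(5, device="cuda")
    assert add(a, b).tolist() == add_reference(a.tolist(), b.tolist())
```

The kernel body is ordinary Python syntax operating on whole blocks of values, and the wrapper takes and returns PyTorch tensors, which is how the code slots into an existing Python machine learning project.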

The Triton library provides a set of primitives reminiscent of NumPy, such as functions that perform matrix operations or reduce an array according to some criterion. It has also been compared to Numba, in that developers combine these primitives with their own code and compile the result to run on the GPU.

Triton is based on a paper published in 2019, but the project is still at an early stage. Currently, it is only available on Linux, and the documentation provided is minimal, so developers who want to adopt it early will need to study the source code and examples carefully. Related information can be found here.