Silicon Valley chip startup Cerebras Systems announced on August 24 (local time) the Cerebras CS-2, a deep learning system about the size of a small refrigerator, built around a giant chip made from a single silicon wafer.

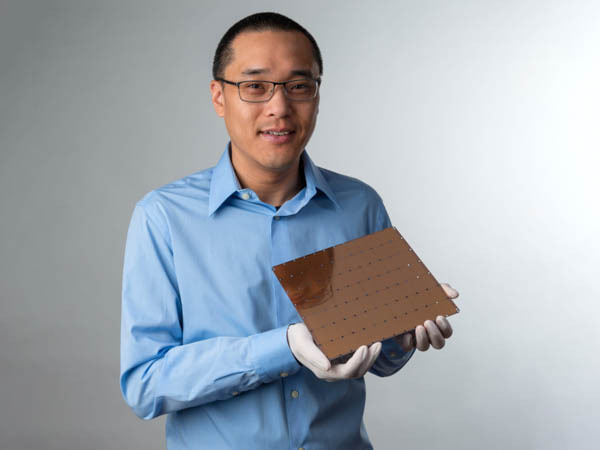

Founded in 2016, Cerebras Systems is a semiconductor manufacturer and artificial intelligence system developer headquartered in Silicon Valley. In 2019, it announced the Wafer Scale Engine (WSE), a chip measuring 20 × 22 cm. In addition, the Cerebras CS-1, a deep learning system equipped with the WSE, is said to have delivered simulation results described as exceeding the laws of physics.

The Cerebras CS-2 announced this time is built on the WSE-2, a chip with 2.6 trillion transistors. According to the announcement, it includes four new technologies: Cerebras Weight Streaming, which decouples parameter storage from the compute units, simplifying the workload distribution model and allowing the CS-2 to scale from 1 to 192 units; Cerebras MemoryX, a memory expansion technology that provides up to 2.4 PB of high-performance memory and can support models with 120 trillion parameters; Cerebras SwarmX, an interconnect that can link 163 million AI-optimized cores across up to 192 CS-2s; and Selectable Sparsity, which allows weights to be selected dynamically.
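The headline figures above can be cross-checked with simple arithmetic. The following is a minimal sketch, not anything published by Cerebras: the function names are hypothetical, the inputs are only the numbers quoted in the announcement, and 2.4 PB is assumed to mean decimal petabytes (10^15 bytes).

```python
# Figures as quoted in the announcement reported above.
TOTAL_CORES = 163_000_000        # "163 million AI-optimized cores"
MAX_SYSTEMS = 192                # maximum number of CS-2 units
MEMORYX_BYTES = 24 * 10**14      # 2.4 PB, ASSUMING decimal petabytes
MAX_PARAMETERS = 120 * 10**12    # 120 trillion parameters


def cores_per_system(total_cores: int, systems: int) -> float:
    """Average number of cores each system contributes (hypothetical helper)."""
    return total_cores / systems


def bytes_per_parameter(capacity_bytes: int, parameters: int) -> float:
    """Memory budget available per parameter (hypothetical helper)."""
    return capacity_bytes / parameters


if __name__ == "__main__":
    print(f"cores per CS-2: {cores_per_system(TOTAL_CORES, MAX_SYSTEMS):,.0f}")
    print(f"bytes per parameter: {bytes_per_parameter(MEMORYX_BYTES, MAX_PARAMETERS):.1f}")
```

Under these assumptions, the quoted numbers work out to roughly 850,000 cores per CS-2 and about 20 bytes of MemoryX capacity per parameter. The announcement as reported here does not say how that per-parameter budget is used.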

What draws attention to the Cerebras CS-2 is that its power efficiency does not drop even as chips are added. The neural networks currently used in AI are too large to be processed on a single chip, so the work is generally distributed across multiple chips. However, power efficiency falls as the number of chips grows, so increasing the overall processing power of a deep learning system has required an enormous amount of power.

In contrast, the power efficiency of the Cerebras CS-2 does not change even as the number of chips increases. As a result, the CS-2 can be scaled to 192 units while maintaining power efficiency, linking 163 million cores into a single system and training a neural network with 120 trillion parameters, a scale said to exceed that of the human brain.

According to Cerebras Systems, large-scale networks such as GPT-3 have made possible what was previously unimaginable, and the landscape of natural language processing (NLP) is changing. The company emphasized that it has now made a neural network with 120 trillion parameters feasible.