The TOP500 global supercomputer rankings announced at the end of June brought the first change at the top in five years. The United States took the lead: Summit, built by IBM, ranked as the world's fastest supercomputer, overtaking China, which had dominated the rankings for the past five years.

In this edition of the list, first place went to Summit. The system, which scored 122.3 petaflops, was designed by IBM and NVIDIA and is housed at Oak Ridge National Laboratory.

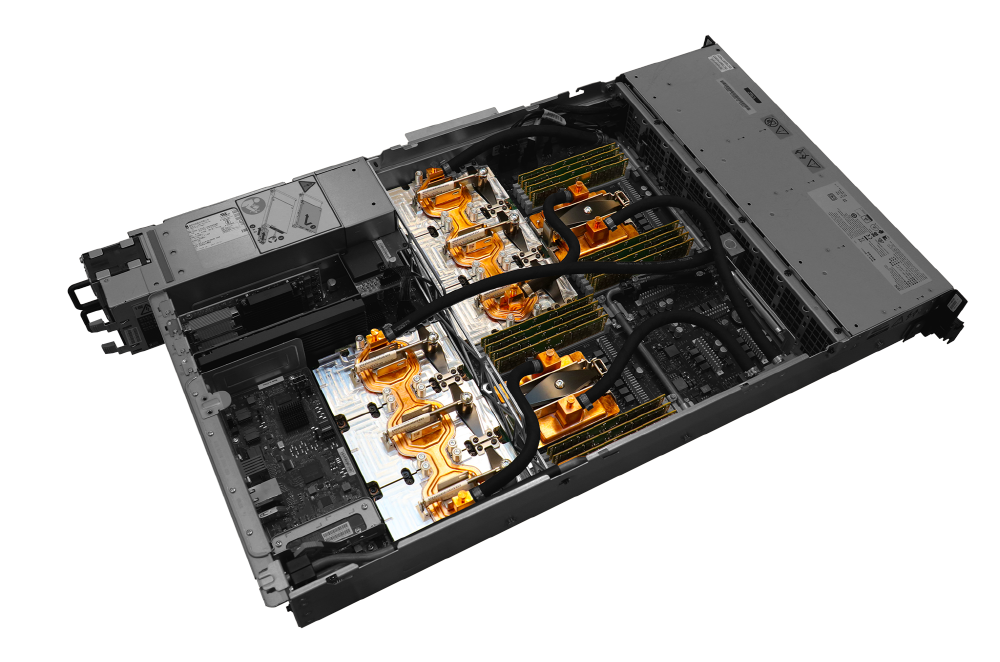

Summit is a much-discussed supercomputer that was unveiled on June 8 (local time). The figure given at its unveiling was 200 petaflops, meaning 200,000 trillion floating-point operations per second, which is tremendous computing power. As noted above, it was designed by IBM and NVIDIA. The machine occupies an area the size of two tennis courts and consumes 13 megawatts at full power. It also uses 15,000 liters of cooling water and has a dedicated cooling system.

As mentioned, Summit's processing capacity is 200 petaflops. One petaflops is 1,000 trillion floating-point operations per second, so Summit's floating-point performance is 200 × 1,000 trillion operations per second. That is eight times the performance of Titan, previously the highest-ranked US system in the supercomputer rankings.
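To make the unit arithmetic concrete, here is a minimal Python sketch. The helper name `petaflops_to_flops` is made up for illustration, and the Titan figure is only what the "eight times" comparison implies if taken at face value, not a measured number.

```python
# One petaflops = 10**15 (1,000 trillion) floating-point operations per second.
PETA = 10**15
TRILLION = 10**12


def petaflops_to_flops(pflops: int) -> int:
    """Convert a petaflops figure into operations per second (hypothetical helper)."""
    return pflops * PETA


summit_flops = petaflops_to_flops(200)

# Expressed in trillions of operations per second, as in the text above.
summit_trillions = summit_flops // TRILLION

# Assumption: taking "eight times Titan" literally gives Titan's implied figure.
implied_titan_pflops = 200 / 8

print(f"Summit: {summit_flops:.1e} operations per second")
print(f"Summit: {summit_trillions:,} trillion operations per second")
print(f"Implied Titan figure: {implied_titan_pflops} petaflops")
```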

Summit has 4,608 servers. Each server has two IBM Power9 CPUs running at 3.1GHz and six NVIDIA Tesla V100 GPUs, so the system as a whole uses 27,648 GPUs. The IBM and NVIDIA chips are connected by NVLink, which is 10 times faster than PCI Express. Total system memory exceeds 10 petabytes. Summit's merits are not limited to sheer scale, however. It is also more power-efficient than existing supercomputers, delivering 15 gigaflops per watt.
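The figures quoted for Summit can be cross-checked with a few lines of Python. This is a rough sketch using only numbers from the article (4,608 servers, 27,648 GPUs, 200 petaflops, 13 megawatts); it assumes the efficiency figure is simply the 200-petaflop number divided by full-load power, which may not be how the official efficiency figure was measured.

```python
SERVERS = 4_608
TOTAL_GPUS = 27_648
PEAK_FLOPS = 200e15    # 200 petaflops, as quoted in the article
POWER_WATTS = 13e6     # 13 megawatts at full power, as quoted in the article

# GPUs per server: the total divides evenly across the servers.
gpus_per_server, remainder = divmod(TOTAL_GPUS, SERVERS)

# Assumption: efficiency = quoted performance / quoted full-load power.
gflops_per_watt = PEAK_FLOPS / POWER_WATTS / 1e9

print(f"GPUs per server: {gpus_per_server} (remainder {remainder})")
print(f"Efficiency: {gflops_per_watt:.1f} gigaflops per watt")
```

Under these assumptions the result is six GPUs per server and roughly 15 gigaflops per watt, in line with the figures in the text.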

Summit is designed mainly for artificial intelligence workloads such as machine learning and neural networks. Its hardware configuration is optimized for AI processing, which speeds up the analysis and processing of large data sets and can support the development of intelligent services and software. It also brings enormous processing power to fields such as cancer research and nuclear fusion.

Second place goes to Sunway TaihuLight, developed by China's National Research Center of Parallel Computer Engineering and Technology (NRCPC). It delivers 93 petaflops and held the top spot for two years, from 2016 to 2017.

Third place goes to another US system, Sierra. Used by Lawrence Livermore National Laboratory, Sierra was developed by IBM and delivers 71.6 petaflops. Its architecture is similar to Summit's, pairing Power9 CPUs with NVIDIA Tesla V100 GPUs across 4,320 nodes.

Fourth place goes to Tianhe-2A, developed by China's National University of Defense Technology, which is rated at 33.86 petaflops. Its predecessor, Tianhe-2, took the top spot in 2013. Tianhe-2 was built with Xeon Phi processors, whereas Tianhe-2A uses the Matrix-2000, an accelerator developed in-house.

Finally, fifth place goes to Japan's ABCI (AI Bridging Cloud Infrastructure), a supercomputer developed by Fujitsu for artificial intelligence processing. It delivers 19.9 petaflops and combines 20-core Xeon Gold processors with NVIDIA Tesla V100 GPUs.

With this list, Summit and Sierra broke into the top ranks that China had monopolized for the last few years, putting the United States back at the top. In overall numbers, however, the United States has 124 systems on the list against China's 206. Given that Japan has 36, the United Kingdom 22, Germany 21, and France 18, the rivalry between the United States and China, already visible in the trade war, is clearly playing out in supercomputing as well.
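As a quick illustration of how lopsided the system counts are, the sketch below turns the per-country numbers quoted above into shares of the 500-entry list. The dictionary keys and variable names are illustrative only.

```python
LIST_SIZE = 500

# System counts per country, as quoted in the article.
counts = {
    "China": 206,
    "United States": 124,
    "Japan": 36,
    "United Kingdom": 22,
    "Germany": 21,
    "France": 18,
}

# Share of the list held by each country, in percent.
shares = {country: n / LIST_SIZE * 100 for country, n in counts.items()}

# Systems belonging to countries the article does not list.
others = LIST_SIZE - sum(counts.values())

for country, share in shares.items():
    print(f"{country:15s} {counts[country]:4d} systems  {share:5.1f}%")
print(f"{'Others':15s} {others:4d} systems  {others / LIST_SIZE * 100:5.1f}%")
```

On these numbers China and the United States together account for 330 of the 500 systems, about two thirds of the list.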

Thanks to this fierce competition, the combined performance of the supercomputers on the TOP500 list has reached 1.22 exaflops, passing the exaflop mark for the first time.
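For a sense of scale, the short sketch below compares Summit's 122.3-petaflop score with the 1.22-exaflop list total. Both numbers come from the article; the only added fact is the unit conversion of 1 exaflops to 1,000 petaflops.

```python
SUMMIT_PFLOPS = 122.3        # Summit's score on the list, from the article
LIST_TOTAL_EFLOPS = 1.22     # combined TOP500 performance, from the article

# 1 exaflops = 1,000 petaflops.
list_total_pflops = LIST_TOTAL_EFLOPS * 1_000

# Summit's share of the whole list, in percent.
summit_share = SUMMIT_PFLOPS / list_total_pflops * 100

print(f"List total: {list_total_pflops:.0f} petaflops")
print(f"Summit's share: {summit_share:.1f}%")
```

In other words, a single machine accounts for roughly a tenth of the combined performance of all 500 systems.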

Two things stand out in this edition of the rankings. First, China is still ahead of the United States in quantity, but in quality the United States has taken first place for the first time in five years. Second is artificial intelligence: Summit, Sierra, and Japan's ABCI are all designed for AI processing.

There is a reason for this. GPUs have become more important in AI-related fields, which involve heavy data processing, and so the GPU is also becoming an increasingly important factor in the race for supercomputer performance.

In fact, the US supercomputers that shot to the top of the list are all GPU-heavy designs. NVIDIA's Tesla is the biggest beneficiary: more than half of the performance newly added to the TOP500 is attributed to Tesla GPUs.

Take Summit. Each node has six Tesla V100s, for the 27,648 GPUs mentioned earlier, and 95% of the total computation is done by the GPUs. Sierra shares the same architecture but differs in that it uses four Tesla V100s per node. ABCI, developed by Japan's Fujitsu, likewise has four Tesla V100s per node.

As described above, the latest change in the TOP500 rankings reflects more than a contest for position between the United States and China. It points to a shift toward systems built for both high-performance and artificial intelligence computing. Designs that once centered on CPU computing are giving way to GPU-centered configurations. The three Tesla V100-based supercomputers, Summit, Sierra, and ABCI, together offer more deep learning computing capability than the remaining 497 systems on the list combined. In that sense, the changes in the rankings say a good deal about where this market is heading.