In June 2018, after employees protested its involvement in a US Department of Defense project using artificial intelligence (AI), Google issued guidelines on how it would use AI. On March 26, 2019, Kent Walker, Google's senior vice president of Global Affairs, wrote in an official blog post: “We will set up an external advisory council to complement these principles with our internal corporate governance and processes.”

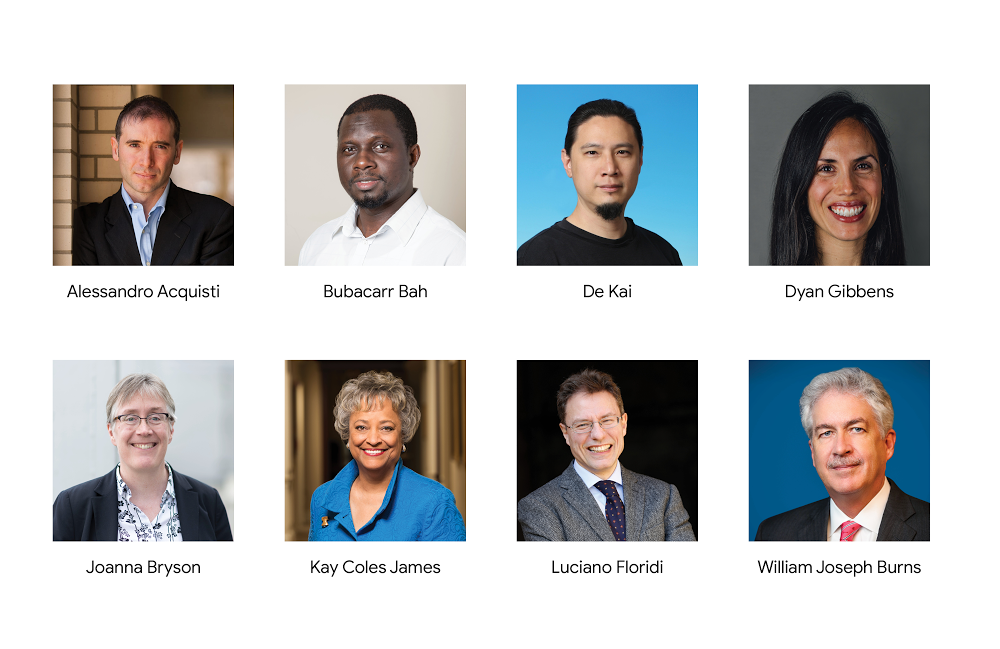

The council, named the Advanced Technology External Advisory Council (ATEAC), is meant to bring a variety of perspectives to Google's work while reviewing complex issues that arise under its AI principles, such as facial recognition and fairness in machine learning. Its members come from a range of fields, including machine learning research, digital ethics, and foreign policy. They include behavioral economist Alessandro Acquisti; De Kai, a natural language processing and machine learning researcher; Dyan Gibbens, an expert in industrial engineering and drone systems; Kay Coles James, a public policy expert; and diplomat William Joseph Burns.

The council is scheduled to hold four meetings by the end of the year. Google plans to involve it in the company's actual development process and to publish a report summarizing the discussions. “Responsible AI development is a broad area in which many people have a stake,” Walker said. “In addition to consulting the experts on ATEAC, we will talk with partners and organizations around the world and listen to a variety of voices beyond the advisory council.”

Google announced its AI ethics guidelines after it became known that the company was taking part in the US Department of Defense's Project Maven, a revelation that led to employee protests and dozens of resignations. Although the military contract was lucrative, a technology company's real foundation is its talented people. To remain a leading high-tech company, Google may need to uphold its principle of not applying AI to weapons and stay true to its original “Don't be evil” motto.