Facebook AI Research has announced Vid2Play, an AI research project that lets users control people in real-life sports footage with a joystick, as if playing a video game.

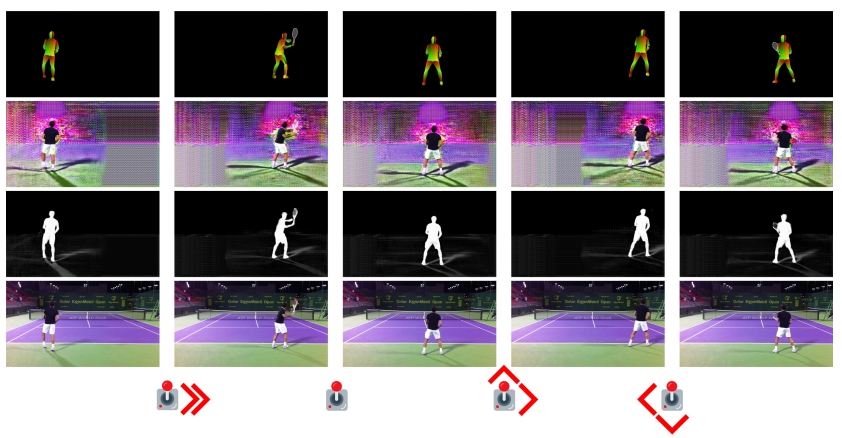

According to the presentation, the researchers used two neural networks: Pose2Pose and Pose2Frame. The former is trained on a specific type of activity, such as dancing, tennis, or fencing; it separates the character's movement from the background and generates pose data that can be controlled. The resulting next pose is then processed by Pose2Frame, which inserts the character's body, shadow, and reflections into the background and outputs video in which the real player appears to be controlled with a keyboard or joystick.
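The two-stage flow described above can be sketched as a simple control loop. This is only an illustration, not the researchers' implementation: the function names `pose2pose`, `pose2frame`, and `play`, the keypoint-list pose format, and the grid-of-floats frame are all assumptions, and the trained networks are replaced by trivial stand-ins.

```python
from typing import List, Tuple

Pose = List[Tuple[float, float]]  # hypothetical format: 2D keypoints as (x, y)
Frame = List[List[float]]         # hypothetical format: grayscale pixel grid


def pose2pose(pose: Pose, control: Tuple[float, float]) -> Pose:
    """Stand-in for Pose2Pose: next pose from the current pose and a control signal.

    The real model is a trained network; here every keypoint is simply
    shifted in the joystick direction.
    """
    dx, dy = control
    return [(x + dx, y + dy) for x, y in pose]


def pose2frame(pose: Pose, background: Frame) -> Frame:
    """Stand-in for Pose2Frame: draw the character into a copy of the background.

    The real model also renders the body, shadow, and reflections; here each
    keypoint just marks one pixel.
    """
    frame = [row[:] for row in background]  # leave the original background untouched
    height, width = len(frame), len(frame[0])
    for x, y in pose:
        col, row = int(round(x)), int(round(y))
        if 0 <= row < height and 0 <= col < width:
            frame[row][col] = 1.0
    return frame


def play(pose: Pose, background: Frame, controls: List[Tuple[float, float]]) -> List[Frame]:
    """Run the loop: each control input yields a new pose, then a rendered frame."""
    frames = []
    for control in controls:
        pose = pose2pose(pose, control)
        frames.append(pose2frame(pose, background))
    return frames
```

Calling `play([(0.0, 1.0)], background, [(1.0, 0.0), (1.0, 0.0)])` on an empty background moves the single-keypoint "character" one pixel to the right per input, which mirrors how each joystick command first produces a new pose and only then a new frame.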

Neural network training usually requires a huge amount of data. The material used here, however, consists of just three videos: a tennis player playing outdoors, an indoor fencing match, and a person walking, each only five to eight minutes long. From these samples alone, it is possible to produce something like a playable game character, a result that previously took a long time and required expensive motion capture equipment or facilities.

The team's goal is to support game development by opening a way to create video games with realistic graphics. Extracting controllable characters from videos, such as those on YouTube, could also be useful for virtual reality or augmented reality, and could help in developing games for Facebook’s Oculus Rift.

For now, the character's movement is smooth but still somewhat unrealistic; for example, the feet appear to slide across the floor. The range of limb movement is also limited.