A technique called the 3D Ken Burns effect has been developed that uses neural networks to turn a single photograph into footage that looks as if the camera's viewpoint were moving through the scene.

With this effect, the result looks as if the camera were moving toward the scene in the original image. Unlike simple image magnification, distant background objects are barely enlarged, while nearby objects are enlarged noticeably. The height of the camera can also change in three-dimensional space.

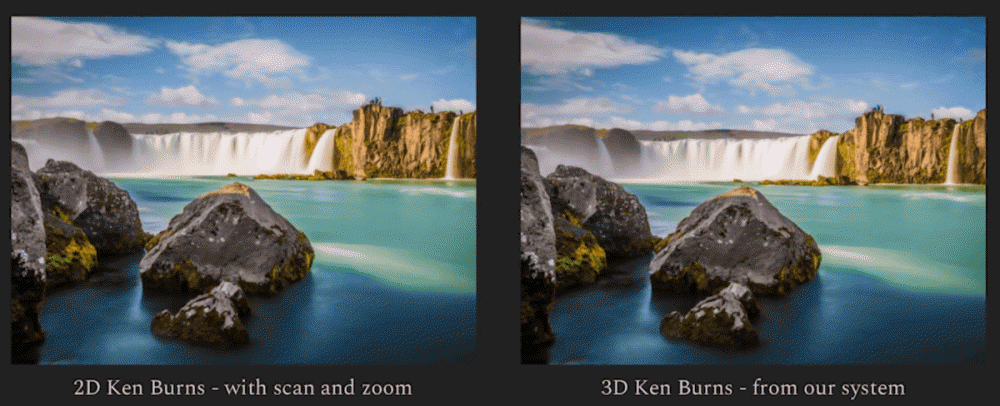

Compare the 2D Ken Burns effect, which is a simple magnification, with the 3D Ken Burns effect. The 2D version enlarges everything, including the background, so it is clearly just a magnified image. In the 3D version, the background stays almost where it was while objects in front grow larger and the height of the viewpoint appears to change, so the result resembles aerial footage shot from a drone. Applying the 3D effect takes a few seconds. Using this effect, you can create a video that looks as if a camera were approaching the scene.
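The difference described above can be illustrated with a small sketch. It assumes an idealized pinhole camera, where the projected size of an object is proportional to the inverse of its depth; the function names `zoom_2d` and `zoom_3d` are hypothetical and are not taken from the researchers' code.

```python
def zoom_2d(scale: float, depth: float) -> float:
    """2D Ken Burns: every pixel is scaled by the same factor.

    The depth argument is accepted only to show that it is ignored.
    """
    return scale


def zoom_3d(camera_advance: float, depth: float) -> float:
    """Apparent magnification when a pinhole camera moves forward.

    Projected size is proportional to 1 / depth, so after the camera
    advances by camera_advance, an object originally at distance depth
    appears depth / (depth - camera_advance) times larger.
    """
    if camera_advance >= depth:
        raise ValueError("camera would pass through or behind the object")
    return depth / (depth - camera_advance)


if __name__ == "__main__":
    # Illustrative distances in arbitrary units (assumed values).
    for label, depth in [("foreground", 2.0), ("background", 100.0)]:
        print(label, "2D:", zoom_2d(1.5, depth), "3D:", zoom_3d(1.0, depth))
```

With the camera advancing one unit, an object two units away doubles in apparent size, while an object 100 units away grows by only about 1 percent. The 2D zoom applies the same factor to both.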

The 3D Ken Burns effect relies on perspective to magnify the foreground more than the background. The research team used 32 virtual 3D environments available on the Unreal Engine 4 Marketplace to capture 134,041 scenes covering indoor, downtown, suburban, and natural settings, and fed them to a system built from three kinds of neural networks (VGG-19, Mask R-CNN, and a refinement network) so that it could learn the depth of objects in an image.

As a result of training, the three networks can produce a point cloud for the image along with the depth of each point. The 3D Ken Burns effect uses these depth values to compute how much each object should be magnified in an image that appears to have been taken from a moved camera. Because objects can crack apart or become distorted during enlargement, the research team also developed a correction system that is applied automatically as needed.
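The idea of turning per-pixel depth into a point cloud and re-rendering it from a moved camera can be sketched as follows. This is a minimal illustration under a pinhole camera assumption, not the researchers' implementation. The helpers `backproject` and `project`, the focal length, and the tiny depth map are all hypothetical, and the sketch does nothing to fill the gaps that open up between points, which is the problem the correction system addresses.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]
Pixel = Tuple[float, float]


def backproject(depth: List[List[float]], focal: float) -> List[Point]:
    """Lift every pixel of a depth map to a 3D point (pinhole model)."""
    height, width = len(depth), len(depth[0])
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0  # assumed principal point
    points = []
    for v in range(height):
        for u in range(width):
            z = depth[v][u]
            points.append(((u - cx) * z / focal, (v - cy) * z / focal, z))
    return points


def project(points: List[Point], focal: float, width: int, height: int,
            camera_shift: Point = (0.0, 0.0, 0.0)) -> List[Optional[Pixel]]:
    """Project 3D points into a camera translated by camera_shift.

    Points that end up at or behind the camera are returned as None.
    """
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    tx, ty, tz = camera_shift
    pixels: List[Optional[Pixel]] = []
    for x, y, z in points:
        xc, yc, zc = x - tx, y - ty, z - tz
        if zc <= 0:
            pixels.append(None)
            continue
        pixels.append((focal * xc / zc + cx, focal * yc / zc + cy))
    return pixels


if __name__ == "__main__":
    # One row of three pixels: two near (depth 2) and one far (depth 100).
    depth_map = [[2.0, 2.0, 100.0]]
    cloud = backproject(depth_map, focal=1.0)
    print(project(cloud, 1.0, 3, 1))                   # original view
    print(project(cloud, 1.0, 3, 1, (0.0, 0.0, 1.0)))  # camera moved forward
```

In this toy example, moving the camera forward by one unit shifts the near pixel in column 0 to column -1, doubling its distance from the image center, while the far pixel in column 2 moves only to about column 2.01.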

Simon Niklaus, the lead author of the paper, studies computer vision and deep learning at Portland State University and wrote the paper during an internship at Adobe Research, Adobe's research organization. The co-authors include Adobe researchers. The authors are considering releasing the code and dataset for the 3D Ken Burns effect but have not yet obtained Adobe's approval. Related information can be found here.