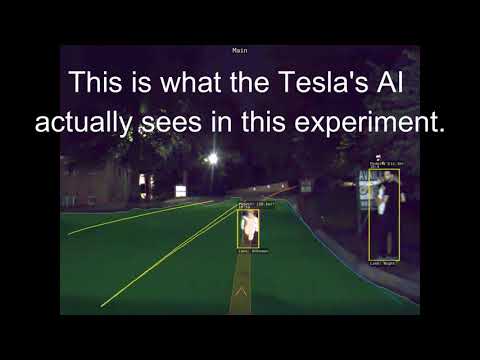

Tesla's self-driving vehicles use multiple cameras instead of LiDAR, relying on neural networks to detect traffic-light colors and obstacles. However, researchers have reportedly found that these systems can be deceived by images of people or traffic signals projected onto the street or onto roadside trees.

The study was conducted at the Cyber Security Research Center of Ben-Gurion University of the Negev in Israel. The researchers report that a vehicle that mistakes a projected "phantom" image for a human could brake suddenly.

The study is titled "Phantom of the ADAS," where ADAS stands for advanced driver-assistance systems. In the experiments, a Mobileye 630 PRO mistook projected images for a nonexistent person and nonexistent lane markings. According to the researchers, a malicious attacker could cause an accident this way, since the system was fooled even by an image projected from a drone for only 125 milliseconds.

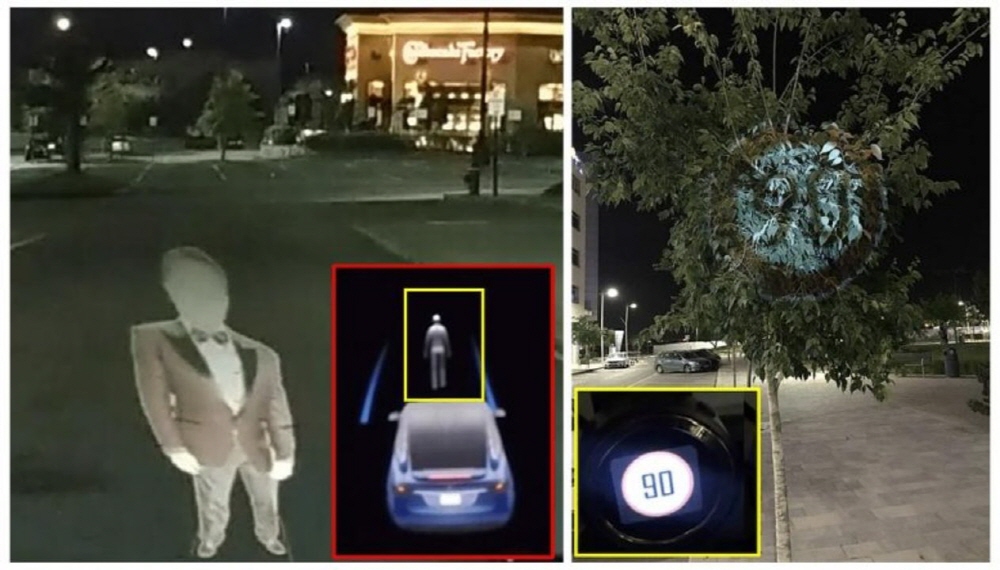

The team argues that this is not a bug or a coding error but a fundamental flaw: the system was never trained to distinguish real objects from fake ones. In addition, as shown in the video, the Mobileye 630 PRO recognizes road signs larger than 16 cm. If a vehicle were equipped with LiDAR, which Elon Musk famously dislikes, it might be able to judge whether an object is real by measuring the distance to it.

Large cities are full of neon signs, electronic billboards, and huge video screens, and some stores even project their logos onto the ground. A vehicle could misinterpret such light sources, and if several conditions happen to coincide, it might detect a hologram-like phantom person.

Previously, McAfee succeeded in tricking a Tesla into speeding by sticking a piece of black tape on a speed limit sign. That finding led to improvements in Tesla's vehicle systems, but it also shows that autonomous driving can still cause problems. It is still a new technology, so caution is warranted. Related information can be found here.