OpenAI, the non-profit organization behind GPT-3, an AI that generates highly accurate text, and DALL·E, an AI that generates images from text descriptions, has published an explanation of CLIP, its newly developed image recognition AI.

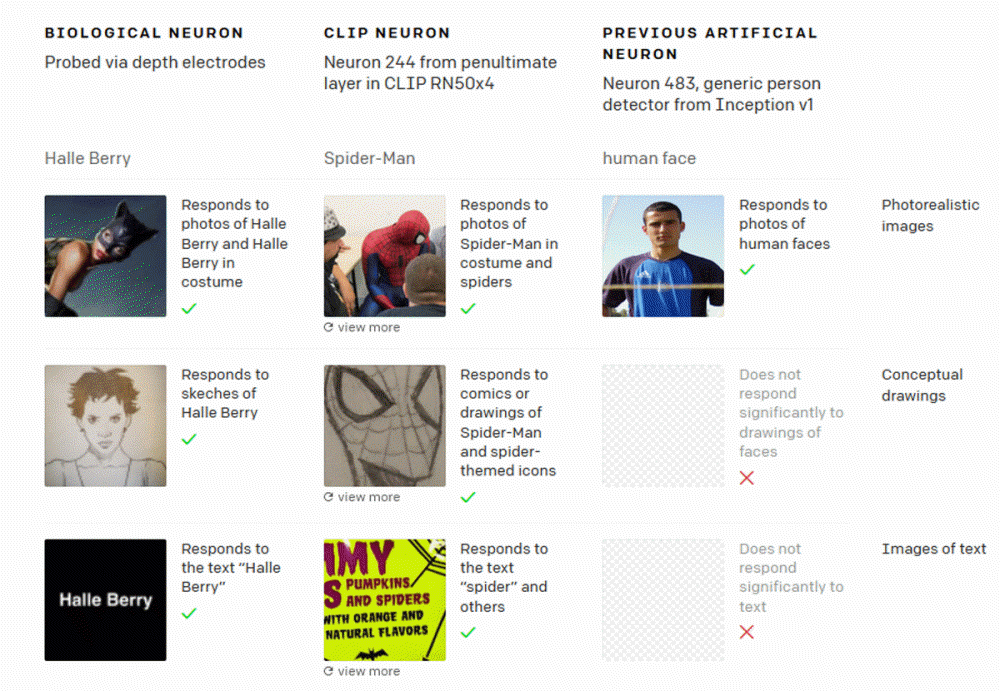

In humans, some neurons are known to respond to a concept regardless of the form in which it is presented. For example, the same neuron may respond to photos or illustrations of the actress Halle Berry and also to the written text "Halle Berry." According to OpenAI, CLIP handles different forms of information in the same way humans do.

Existing image recognition models that recognize human faces in photos do not respond to drawings of faces or to the written word "face." CLIP, however, responds in the same way to photos of people in Spider-Man cosplay, to Spider-Man illustrations, and to the text "Spider-Man."
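One way to picture this is that CLIP maps images and text into a shared embedding space and labels an input by finding the closest text description (cosine similarity). The sketch below is a toy illustration under that assumption: the 3-D vectors and prompt strings are invented for the example, whereas real CLIP embeddings are learned and have hundreds of dimensions.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical text-side embeddings; axes loosely mean ("Spider-Man", "face", "dog").
TEXT_PROMPTS = {
    "a photo of Spider-Man": [0.9, 0.1, 0.0],
    "a photo of a human face": [0.1, 0.9, 0.1],
    "a photo of a dog": [0.0, 0.1, 0.9],
}

# Hypothetical embeddings for three different renderings of the same concept.
INPUTS = {
    "cosplay photo": [0.8, 0.4, 0.0],
    "comic illustration": [0.85, 0.1, 0.05],
    "the written text 'Spider-Man'": [0.7, 0.0, 0.1],
}

def zero_shot_label(embedding, prompts):
    """Return the prompt whose embedding is most similar to `embedding`."""
    return max(prompts, key=lambda p: cosine(embedding, prompts[p]))

for name, emb in INPUTS.items():
    print(f"{name}: {zero_shot_label(emb, TEXT_PROMPTS)}")
```

Because the photo, the illustration, and the text all land near the same point in this toy space, they all receive the same label; nothing in the matching step cares which form the input took.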

CLIP also recognizes images by combining features of other concepts. When recognizing a piggy bank, for example, CLIP combines a "finance" feature with other elements such as dolls and toys. CLIP can also subtract elements. For example, a surprised expression is recognized as a combination of features for celebration, hugging, shock, and smiling, while an intimate expression is recognized as a combination built around a soft smile with an element of illness subtracted.
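The adding and subtracting described above can be sketched as a linear combination: each class score is a weighted sum of "neuron" activations, where a positive weight adds a feature and a negative weight subtracts it. This is a minimal illustration only; the neuron names echo the concepts in the text, but every weight and activation value here is invented and is not taken from CLIP.

```python
# Hypothetical class weights: positive adds a feature, negative subtracts it.
CLASS_WEIGHTS = {
    "piggy bank": {"finance": 1.0, "dolls_toys": 0.8},
    "surprise": {"celebration": 0.7, "hug": 0.3, "shock": 1.0, "smile": 0.4},
    "intimate": {"soft_smile": 1.0, "sick": -1.0},
}

def class_scores(activations, class_weights):
    """Score each class as a weighted sum of neuron activations."""
    return {
        label: sum(w * activations.get(neuron, 0.0) for neuron, w in weights.items())
        for label, weights in class_weights.items()
    }

def predict(activations, class_weights):
    """Return the class with the highest score."""
    scores = class_scores(activations, class_weights)
    return max(scores, key=scores.get)

# Hypothetical activations for a piggy-bank image and two face images.
piggy_bank_image = {"finance": 0.9, "dolls_toys": 0.7}
gentle_face = {"soft_smile": 0.8, "sick": 0.0}
sickly_face = {"soft_smile": 0.8, "sick": 0.9}

print(predict(piggy_bank_image, CLASS_WEIGHTS))
print(class_scores(gentle_face, CLASS_WEIGHTS)["intimate"])
print(class_scores(sickly_face, CLASS_WEIGHTS)["intimate"])
```

The two face examples share the same soft-smile activation, but the negative weight on "sick" pulls the "intimate" score down when the illness feature is present.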

OpenAI also describes CLIP's weaknesses. For example, CLIP correctly recognizes an image of a standard poodle as a standard poodle, but if several "$" signs are placed on the image, it recognizes the image as a piggy bank. Likewise, because CLIP is so good at reading handwritten text, an apple with a piece of paper reading "iPod" stuck to it can be recognized as an iPod. OpenAI is releasing the tools it used to analyze CLIP and plans to keep addressing these problems through further research on CLIP. Related information can be found here.
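A toy way to see why pasted text can flip the label: if the text's features are blended into the image embedding, they can pull it closer to a different class description. The sketch below assumes a 2-D embedding whose axes loosely mean ("poodle-like", "money"); the vectors and the `add_overlay` blending rule are hypothetical simplifications, not how CLIP actually composes features.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical class embeddings on axes ("poodle-like", "money").
CLASS_PROMPTS = {
    "standard poodle": [1.0, 0.0],
    "piggy bank": [0.3, 1.0],
}

POODLE_IMAGE = [0.9, 0.1]       # hypothetical embedding of a clean poodle photo
DOLLAR_SIGN_TEXT = [0.0, 1.0]   # hypothetical features contributed by "$" marks

def add_overlay(image, overlay, strength):
    """Blend an overlay's (hypothetical) features into an image embedding."""
    return [x + strength * y for x, y in zip(image, overlay)]

def classify(embedding):
    """Pick the class description most similar to the embedding."""
    return max(CLASS_PROMPTS, key=lambda c: cosine(embedding, CLASS_PROMPTS[c]))

for strength in (0.0, 1.0):
    emb = add_overlay(POODLE_IMAGE, DOLLAR_SIGN_TEXT, strength)
    print(f"overlay strength {strength}: {classify(emb)}")
```

With no overlay the embedding sits near "standard poodle"; adding enough "$" features moves it past the point where "piggy bank" becomes the closer description, even though the dog itself has not changed.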