ANYmal is a four-legged walking robot developed at the Robotic Systems Lab at ETH Zurich. According to a new paper published in Science Robotics, the robot has been trained to move faster than before and to get back up on its own after a fall.

ANYmal is a robot that looks like a dog. It is built by ANYbotics, a company founded in 2016, and was originally intended for search and rescue. Thanks to its waterproofing, it is designed to keep moving even over difficult terrain such as forests and snowy areas. It can go to dangerous places that humans cannot reach, for example on search missions. Of course, the robot itself still has a lot of room for improvement.

The research team chose reinforcement learning, a type of machine learning, as a potential solution. With reinforcement learning, a robot trains itself by trial and error to find the best way to perform a task such as walking, much as an animal learns. Reinforcement learning is not easy to apply to real legged robots, however, because their movements are so complex. So far, robot learning has mainly been done in computer simulation. The problem is that it is not easy to transfer what is learned in simulation to a real robot.
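The trial-and-error idea can be illustrated with a very small sketch. This is not the method used in the paper; it is a toy example that assumes a hypothetical robot choosing among four made-up gait settings, each with an invented average reward (`GAIT_REWARDS`), and learning which one works best simply by trying them and averaging the noisy results.

```python
import random


def learn_by_trial_and_error(true_rewards, trials=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy learning over a fixed set of actions (toy example)."""
    rng = random.Random(seed)
    estimates = [0.0] * len(true_rewards)  # learned value of each action
    counts = [0] * len(true_rewards)       # how often each action was tried

    for _ in range(trials):
        if rng.random() < epsilon:
            # Explore: try a random action.
            action = rng.randrange(len(true_rewards))
        else:
            # Exploit: pick the action that currently looks best.
            action = max(range(len(true_rewards)), key=lambda a: estimates[a])

        # Noisy feedback stands in for one real or simulated trial.
        reward = true_rewards[action] + rng.gauss(0.0, 0.1)

        # Update the running average for the chosen action.
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts


# Hypothetical average rewards for four gait settings (invented values).
GAIT_REWARDS = [0.2, 0.5, 0.9, 0.4]

estimates, counts = learn_by_trial_and_error(GAIT_REWARDS)
best = max(range(len(estimates)), key=lambda a: estimates[a])
print("best gait setting:", best)
print("times each setting was tried:", counts)
```

A real legged robot has a far larger space of possible movements than four fixed settings, which is one reason training is usually done in simulation rather than on hardware.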

The research team has developed a neural network that makes it easier than before to transfer what is learned in simulation to the robot. As a result, the simulation could run nearly 1,000 times faster than real time. Building a similar system does not require large amounts of computing power, because an ordinary PC is enough for the simulation.

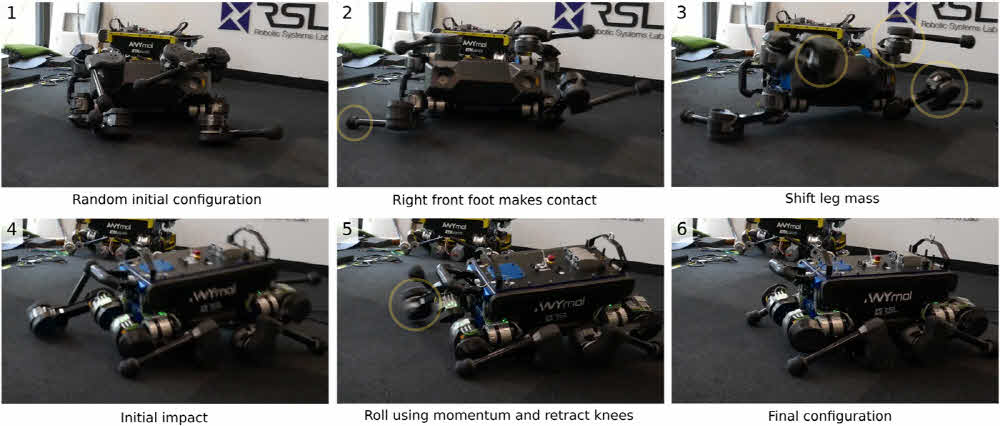

The newly trained ANYmal is faster and more efficient, and it beat its previous walking speed record by about 25%. Its ability to get back up on its own after a fall has not been seen before in quadruped robots of similar complexity.

The researchers believe that this technology can be applied to other robots. However, additional training will be required before it can be used in a variety of environments. The paper covers only flat terrain; on hilly terrain, the robot would need to process additional sensor data and learn a correspondingly adapted policy.
