GPT-2, a text-generating AI developed by OpenAI, a non-profit organization that researches artificial intelligence, could automatically generate such convincing text that the development team warned it was too dangerous, and the release of the full model was delayed. Now, a technique is being developed that automatically generates images by directly applying the same architecture used to train GPT-2.

Transferring a model trained in one domain to another domain is called transfer learning. The approach has been very successful in natural language processing: in addition to GPT-2, which generates text without human involvement, it has driven major progress in Google's BERT and Facebook's RoBERTa. For images, however, despite this striking success in language, transfer learning models have so far failed to produce comparably powerful features.

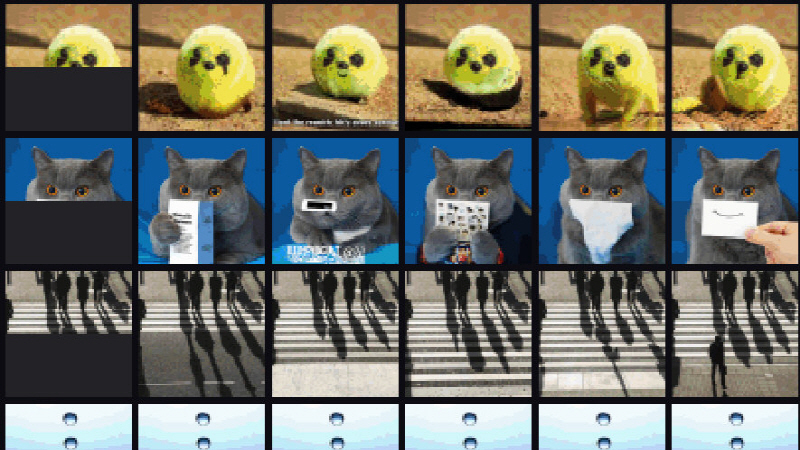

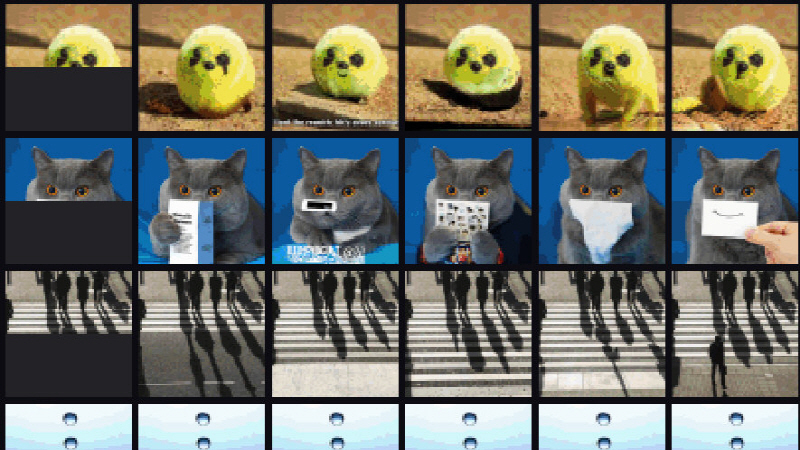

OpenAI trained GPT-2-style transfer learning models on sequences of pixels so that they generate new image samples and completions that stay consistent with the original image. With this technique, a human supplies only half of an image and the model completes the rest.
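The completion step can be sketched as an autoregressive loop, assuming the image has already been flattened into a raster-ordered (left to right, top to bottom) sequence of integer tokens. This is a minimal illustration, not OpenAI's code: `complete_image` and the toy predictor `copy_row_above` are hypothetical names, and in iGPT the predictor would be a large Transformer rather than a rule that copies the pixel above. The point is only the loop itself: the top half is kept, and each remaining token is sampled conditioned on every token before it.

```python
import random

def complete_image(tokens, height, width, next_token_probs, seed=0):
    """Keep the top half of a raster-ordered token sequence and sample the rest one token at a time.

    `next_token_probs(prefix)` is any callable returning a probability list over the
    vocabulary for the token that follows `prefix` (hypothetical stand-in for a model).
    """
    rng = random.Random(seed)
    n_given = (height // 2) * width          # top half of the rows, in raster order
    seq = list(tokens[:n_given])
    while len(seq) < height * width:
        probs = next_token_probs(seq)        # condition on all tokens so far
        seq.append(rng.choices(range(len(probs)), weights=probs)[0])
    return seq

def copy_row_above(vocab_size, width, confidence=0.9):
    """Hypothetical toy 'model': mostly predicts the same token as the pixel one row up."""
    def next_token_probs(prefix):
        probs = [(1 - confidence) / (vocab_size - 1)] * vocab_size
        probs[prefix[-width]] = confidence   # prefix[-width] is the pixel directly above
        return probs
    return next_token_probs

H, W, VOCAB = 4, 4, 8
original = [i % VOCAB for i in range(H * W)]
model = copy_row_above(VOCAB, W, confidence=1.0)  # deterministic for the demo
completed = complete_image(original, H, W, model)
for r in range(H):
    print(completed[r * W:(r + 1) * W])
# [0, 1, 2, 3]
# [4, 5, 6, 7]
# [4, 5, 6, 7]
# [4, 5, 6, 7]
```

Setting `confidence` below 1.0 makes the toy predictor stochastic, so different seeds give different completions of the same top half, which mirrors how iGPT produces several candidate completions for one input.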

OpenAI trained three versions of the GPT-2-based model on ImageNet: iGPT-S with 76 million parameters, iGPT-M with 455 million parameters, and iGPT-L with 1.4 billion parameters. It also trained iGPT-XL, with 6.8 billion parameters, on ImageNet together with images from the internet. To keep inputs manageable, each model works on reduced-resolution images and represents pixels with a custom 9-bit color palette, which produces an input sequence three times shorter than the standard RGB representation without compromising accuracy.
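The palette arithmetic can be sketched as follows. A 9-bit palette has 2^9 = 512 colors, and encoding each pixel as a single palette index (instead of separate R, G and B values) cuts the sequence length by a factor of three. This sketch assumes the palette is built by k-means clustering of RGB values, which matches OpenAI's own write-up but is not stated in the article above; the function names are hypothetical, and the demo uses k=16 on an 8x8 random image only so that pure-Python k-means runs quickly.

```python
import random

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest_index(pixel, palette):
    """Index of the palette color closest to `pixel` (squared Euclidean distance in RGB)."""
    return min(range(len(palette)), key=lambda i: squared_distance(pixel, palette[i]))

def kmeans_palette(pixels, k, iters=10, seed=0):
    """Build a k-color palette from (R, G, B) tuples with plain Lloyd's k-means (assumed method)."""
    rng = random.Random(seed)
    palette = [tuple(map(float, p)) for p in rng.sample(pixels, k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in pixels:
            clusters[nearest_index(p, palette)].append(p)
        for i, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centroid
                palette[i] = tuple(sum(ch) / len(members) for ch in zip(*members))
    return palette

def image_to_tokens(image, palette):
    """Flatten an H x W grid of RGB tuples (raster order) into one palette index per pixel."""
    return [nearest_index(px, palette) for row in image for px in row]

# A 9-bit palette holds 2**9 = 512 colors; the demo uses a smaller k only for speed.
assert 2 ** 9 == 512

H = W = 8
rng = random.Random(1)
image = [[(rng.randrange(256), rng.randrange(256), rng.randrange(256)) for _ in range(W)]
         for _ in range(H)]
pixels = [px for row in image for px in row]
palette = kmeans_palette(pixels, k=16)
tokens = image_to_tokens(image, palette)

rgb_sequence_length = H * W * 3        # one token per R, G and B value
palette_sequence_length = len(tokens)  # one token per pixel
print(rgb_sequence_length, palette_sequence_length)  # 192 64
```

With k=512 the same code would produce 9-bit tokens; only the clustering cost grows, which is why a real implementation would use a vectorized library rather than nested Python loops.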

According to OpenAI, scaling up the models and training them for longer improved image quality and produced strong benchmark results. However, iGPT has limitations, such as generating low-quality images and being trained on biased data, so it serves as a proof-of-concept demonstration. The research team describes the findings as a small but important step toward bridging the gap between computer vision and language understanding. Related information can be found here.
