Google has announced an improved Crawl Stats report that helps site owners better understand how its crawler, Googlebot, crawls their websites.

Google operates Googlebot, a crawler that automatically fetches web documents and images and stores them in a database so that it can index the many websites around the world. Much like a user browsing the web, Googlebot views a page, follows its links, and accumulates data about each page in Google's database.

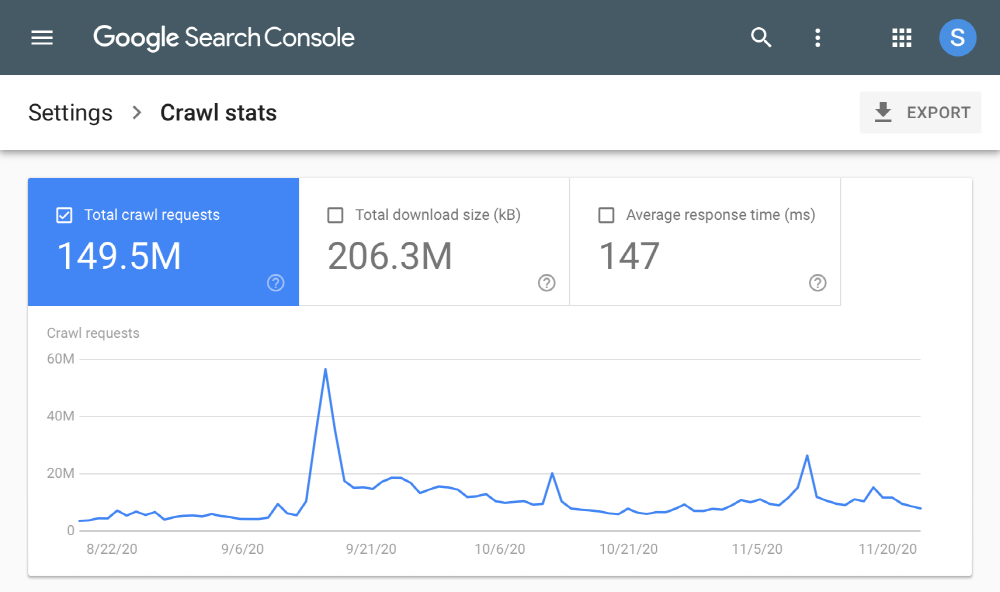

If you run your own site, you have so far been able to see the statistics gathered by Googlebot in the Crawl Stats report. The announcement made on November 24, 2020 improves that report. Going forward, it shows the total number of requests grouped by response code, crawled file type, crawl purpose, and Googlebot type, along with detailed information on host status. It also lists example URLs showing where on the site requests occurred, and it supports domain properties that gather multiple subdomains in one place.

Among these improvements, the over-time charts show changes in the total number of requests, average download size, and average response time. Grouped crawl data is a new feature that lets you view statistics broken down by attributes such as the type or format of the crawled URLs. For both high-level and detailed information on host status issues, you can check errors from the last 90 days. For a domain property that groups multiple hosts, you can also check the status of each host. Related information can be found here.
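
To make the idea of grouped crawl data more concrete, here is a minimal Python sketch of the same kind of breakdown computed over a handful of request records. The records, field names (`host`, `status`, `file_type`, `bytes`, `ms`), and helper functions are all hypothetical and made up for illustration; they are not an export format or API of the Crawl Stats report.

```python
from collections import Counter, defaultdict

# Hypothetical crawl request records (invented sample data, not real Googlebot output).
records = [
    {"host": "www.example.com", "status": 200, "file_type": "HTML", "bytes": 1000, "ms": 100},
    {"host": "www.example.com", "status": 404, "file_type": "HTML", "bytes": 500, "ms": 50},
    {"host": "blog.example.com", "status": 200, "file_type": "Image", "bytes": 3000, "ms": 200},
    {"host": "blog.example.com", "status": 200, "file_type": "HTML", "bytes": 1000, "ms": 100},
]


def group_counts(rows, key):
    """Count requests per distinct value of `key` (e.g. response code or file type)."""
    return Counter(row[key] for row in rows)


def summarize_by_host(rows):
    """Return request count, average download size, and average response time per host."""
    totals = defaultdict(lambda: {"requests": 0, "bytes": 0, "ms": 0})
    for row in rows:
        entry = totals[row["host"]]
        entry["requests"] += 1
        entry["bytes"] += row["bytes"]
        entry["ms"] += row["ms"]
    return {
        host: {
            "requests": t["requests"],
            "avg_bytes": t["bytes"] / t["requests"],
            "avg_ms": t["ms"] / t["requests"],
        }
        for host, t in totals.items()
    }


if __name__ == "__main__":
    print(group_counts(records, "status"))
    print(group_counts(records, "file_type"))
    print(summarize_by_host(records))
```

Grouping by a single key mirrors the report's breakdowns by response code or file type, while the per-host summary corresponds to checking each host separately within a domain property.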
