An algorithm has been developed that cuts the time needed to generate sound effects for virtual reality from hours of computation to just a few seconds. It was announced at SIGGRAPH 2019, held in Los Angeles, USA, from July 28 to August 1.

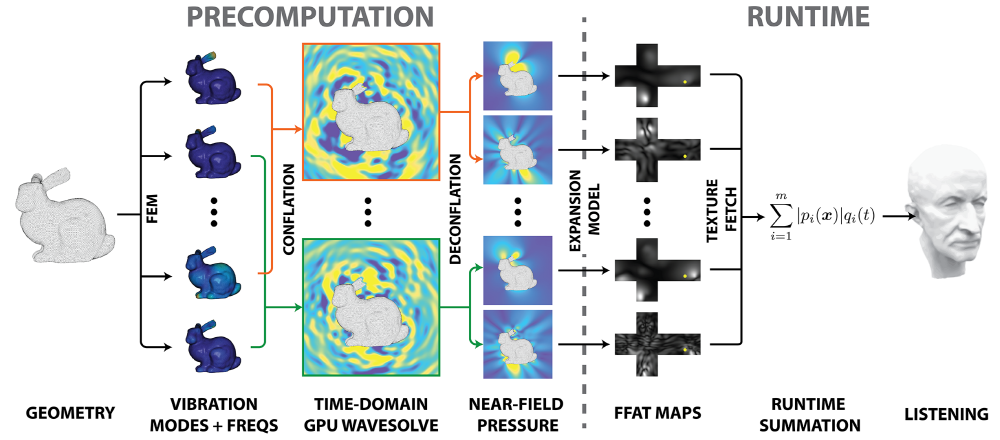

The algorithm was developed by a research team at Stanford University. In movies and games, a three-dimensional effect is created by moving sound or adjusting volume between the speakers of a surround sound system. In an interactive VR environment, however, it is difficult to predict where objects and the listener will be, so simply creating a sound model that reproduces realistic sound can take a long time. The research team devised an algorithm that can create such a sound model in seconds.

The resulting model synthesizes sound that is as realistic as the output of the slow algorithms of the past. By speeding up model creation, it is said to make building interactive environments with realistic sound effects practical. Existing sound modeling algorithms are based on the theory of 19th-century scientist Hermann Ludwig Ferdinand von Helmholtz and on the boundary element method, an approach that was expensive for commercial use.

The algorithm developed by the research team was inspired by 20th-century Austrian composer Fritz Heinrich Klein’s method of mixing many piano sounds into one sound. The algorithm was named KleinPAT after him.
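The article does not describe how KleinPAT works internally, so the following is only a toy illustration of the general idea of mixing many sounds into one, not the Stanford team's algorithm. It assumes three sine tones with made-up frequencies and amplitudes, sums them into a single signal, and then recovers each tone's amplitude by projecting the mix onto a sine wave at that tone's frequency. All names and values here (`mix_tones`, `recover_amplitude`, the sample rate, the tone table) are hypothetical.

```python
import math

SAMPLE_RATE = 8000         # samples per second (illustrative choice)
NUM_SAMPLES = SAMPLE_RATE  # exactly one second of audio


def mix_tones(tones):
    """Sum sine tones, given as {frequency_hz: amplitude}, into one signal."""
    return [
        sum(
            amplitude * math.sin(2 * math.pi * freq * n / SAMPLE_RATE)
            for freq, amplitude in tones.items()
        )
        for n in range(NUM_SAMPLES)
    ]


def recover_amplitude(signal, frequency_hz):
    """Estimate one tone's amplitude by projecting the mix onto its sine wave.

    This relies on sine waves with different whole-number frequencies being
    orthogonal over a one-second window, so the other tones cancel out.
    """
    projection = sum(
        sample * math.sin(2 * math.pi * frequency_hz * n / SAMPLE_RATE)
        for n, sample in enumerate(signal)
    )
    return 2 * projection / len(signal)


# Hypothetical tones: frequency in Hz mapped to amplitude.
tones = {220: 1.0, 330: 0.5, 440: 0.25}
mixed = mix_tones(tones)
recovered = {freq: recover_amplitude(mixed, freq) for freq in tones}

for freq, amplitude in recovered.items():
    print(f"{freq} Hz: amplitude ~ {amplitude:.3f}")
```

The point of the sketch is that a single combined signal can still carry separable information about each of its components, as long as their frequencies are distinct.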

In short, this innovation has the potential to dramatically reduce the cost of producing realistic sound for virtual reality content. In that respect, the algorithm could be regarded as a game changer for interactive environments. Related information can be found here.
