Even a high-end smartphone struggles to match the image quality of an expensive dedicated digital camera. What smartphones do have is a powerful processor, which means photos can be processed on the spot. On the iPhone, for example, you can change the lighting virtually after taking a picture in portrait mode.

Portrait mode, however, is still limited to flagship iPhone models. A team of researchers at Google and the University of California, San Diego, has therefore developed a technique that could bring similar features to more ordinary smartphones. Depending on the use case, it may even be more convenient than portrait mode.

iPhone portrait mode captures the same scene with multiple cameras on the device and analyzes the images in software to create a depth map. Put simply, a depth map is a black-and-white image that represents how far each part of the scene is from the camera. It lets the software determine what is in the foreground and what is in the background. This is what makes it possible to keep a person's eyes in focus while blurring the background.
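The depth-map idea can be illustrated with a minimal sketch. It is not Apple's implementation: the function names, the "near is bright" convention, and the `tolerance_m` parameter are assumptions made purely for illustration. It turns per-pixel distances into a grayscale image and marks which pixels would count as background to be blurred.

```python
def depth_to_grayscale(depth_m):
    """Convert per-pixel distances (metres) to 0..255 gray levels.

    Assumed convention: the nearest pixel is white (255), the farthest black (0).
    """
    flat = [d for row in depth_m for d in row]
    near, far = min(flat), max(flat)
    span = far - near
    if span == 0:
        # Flat scene: every pixel is equally near.
        return [[255 for _ in row] for row in depth_m]
    return [[round(255 * (far - d) / span) for d in row] for row in depth_m]


def background_mask(depth_m, subject_distance_m, tolerance_m=0.5):
    """Mark pixels that lie clearly behind the subject (candidates for blur)."""
    limit = subject_distance_m + tolerance_m
    return [[d > limit for d in row] for row in depth_m]


# Hypothetical 2x2 scene: a face at about 1 m, a wall at 2 m and 5 m.
depth = [[1.0, 1.0],
         [2.0, 5.0]]
gray = depth_to_grayscale(depth)
mask = background_mask(depth, subject_distance_m=1.0)
```

In this toy scene the two face pixels stay out of the mask, while the two wall pixels are flagged as background.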

The iPhone's portrait feature also uses depth maps to pick out human faces. Photos taken in portrait mode can later be switched to virtual studio lighting or stage lighting. The lighting is entirely synthetic, but it looks natural because the depth map gives the software information about the face.

The research team instead trained a neural network to learn how a photograph of a human face appears under various lighting conditions. Each subject sat on a stage surrounded by a sphere of 304 LED lights, which were lit one at a time while cameras captured the subject from 7 directions. With 3 to 5 facial expressions photographed per person, this reportedly comes to more than 10,000 images per person.

Eighteen people served as models for the training data: 7 white men, 7 Asian men, 2 white women, 1 Asian woman, and 1 African woman. The research team said that the training data was manually adjusted so that the method could also be applied to photos of people with dark skin.
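The capture figures quoted above can be checked with a little arithmetic. The sketch below assumes exactly one image per combination of light, camera, and expression, which the article does not state explicitly. The constant and function names are illustrative.

```python
NUM_LIGHTS = 304     # LED lights, lit one at a time
NUM_CAMERAS = 7      # camera directions
NUM_SUBJECTS = 18    # people photographed


def images_per_person(expressions: int) -> int:
    """Images for one person, assuming one shot per light/camera/expression."""
    return NUM_LIGHTS * NUM_CAMERAS * expressions


low = images_per_person(3)    # fewest expressions mentioned
high = images_per_person(5)   # most expressions mentioned
total_low = low * NUM_SUBJECTS
total_high = high * NUM_SUBJECTS

print(f"per person: {low} to {high}")
print(f"all subjects: {total_low} to {total_high}")
```

With 5 expressions the per-person count is 10,640, which is consistent with the "more than 10,000" figure.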

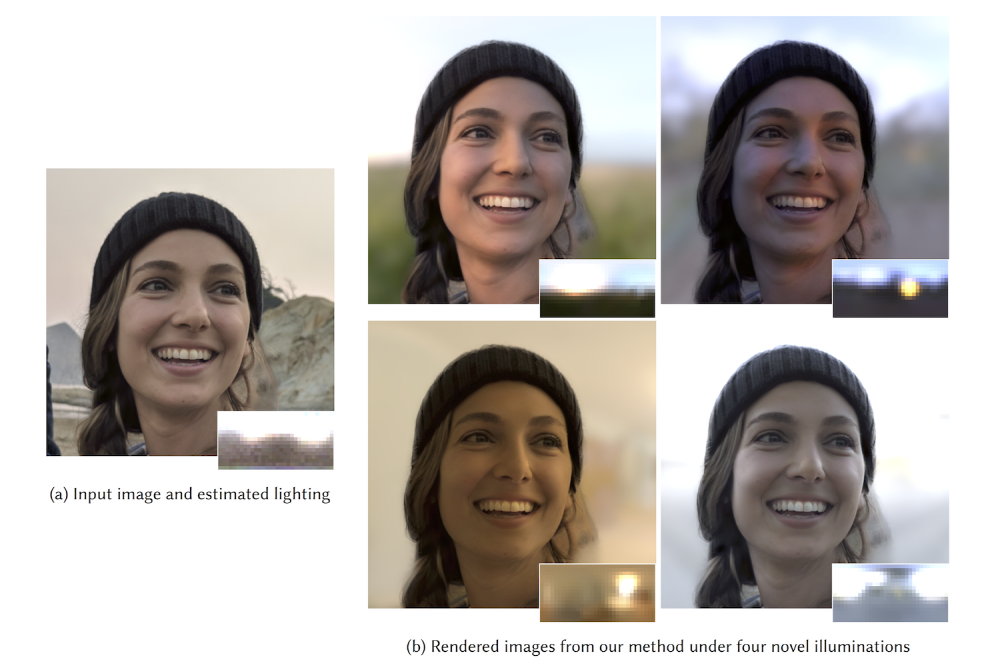

By training on data from these 18 people, covering 3 to 5 facial expressions, 304 light sources, and 7 camera angles, the network can reproduce arbitrary lighting environments on a face in a photo without a depth map. In the demonstration video, the lighting changes in the photos look quite natural.

For reference, portrait mode on iOS offers only 6 lighting modes. With the research team's method, by contrast, the virtual light source can be placed anywhere in 3D space, and the color of the subject's face and the way shadows fall change according to its position. In this sense, much more flexible applications may be possible.
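To see why the position of a light source changes how bright a point on a face appears, consider the classic Lambertian (cosine) shading rule. This is a textbook model swapped in for illustration only. It is not the research team's neural network, and the function name and coordinates are hypothetical.

```python
import math


def lambert_brightness(surface_point, surface_normal, light_position):
    """Relative brightness (0..1) of a matte surface point under a point light.

    Uses Lambert's cosine law: brightness is the cosine of the angle between
    the surface normal and the direction to the light, clamped at zero.
    """
    to_light = [l - p for l, p in zip(light_position, surface_point)]
    light_len = math.sqrt(sum(c * c for c in to_light))
    normal_len = math.sqrt(sum(c * c for c in surface_normal))
    if light_len == 0 or normal_len == 0:
        return 0.0
    dot = sum(n * t for n, t in zip(surface_normal, to_light))
    return max(0.0, dot / (light_len * normal_len))


# A point on a cheek facing the camera (+z direction).
cheek = (0.0, 0.0, 0.0)
normal = (0.0, 0.0, 1.0)

front = lambert_brightness(cheek, normal, (0.0, 0.0, 2.0))    # light in front
side = lambert_brightness(cheek, normal, (2.0, 0.0, 0.0))     # light from the side
behind = lambert_brightness(cheek, normal, (0.0, 0.0, -2.0))  # light behind
```

Moving the light from the front to the side or behind takes the same point from fully lit to dark. That is the kind of variation a freely placed virtual light has to reproduce.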

The research team says the technique can process a 640 × 640 pixel image in 160 ms, so the result can be viewed in real time on a smartphone screen. However, the latest smartphones capture images of around 12 million pixels, which by simple calculation is about 30 times more than 640 × 640. At that size, processing a single image would take nearly 5 seconds. Making such heavy processing practical remains a task for the implementation. Related information can be found here.
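The "simple calculation" can be written out as follows. It assumes that processing time grows linearly with the number of pixels, which is a rough simplification rather than a measured result. The names are illustrative.

```python
REFERENCE_PIXELS = 640 * 640   # image size quoted by the researchers
REFERENCE_MS = 160             # processing time quoted for that size


def estimated_ms(pixels: int) -> float:
    """Estimated processing time, assuming time scales linearly with pixels."""
    return REFERENCE_MS * pixels / REFERENCE_PIXELS


full_res_pixels = 12_000_000                      # roughly a 12-megapixel photo
ratio = full_res_pixels / REFERENCE_PIXELS        # about 29.3x more pixels
seconds = estimated_ms(full_res_pixels) / 1000    # about 4.7 seconds

print(f"{ratio:.1f}x the pixels, about {seconds:.1f} s per image")
```

Under that assumption a 12-megapixel photo has about 29.3 times as many pixels and takes about 4.7 seconds, in line with the rounded figures of 30 times and nearly 5 seconds.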
