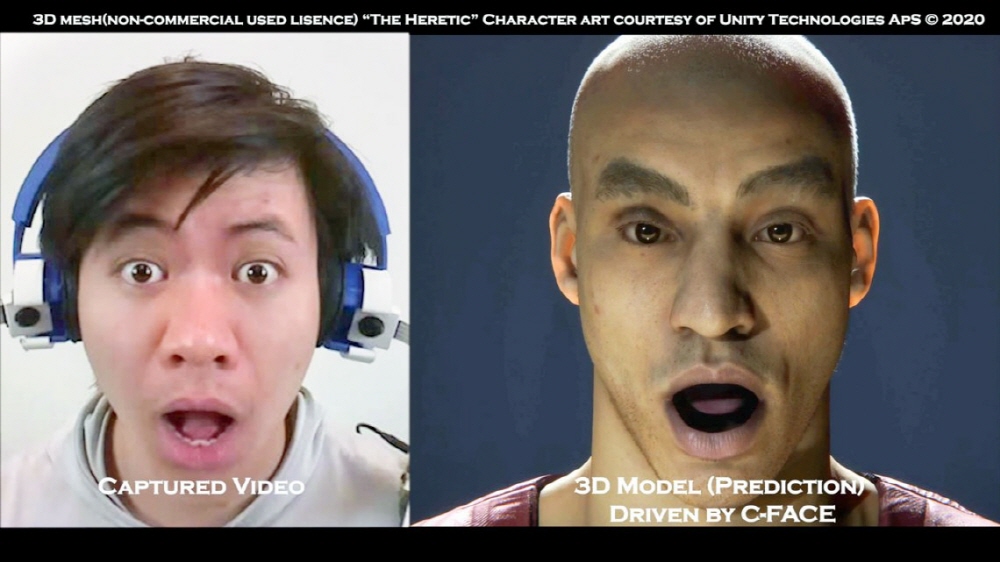

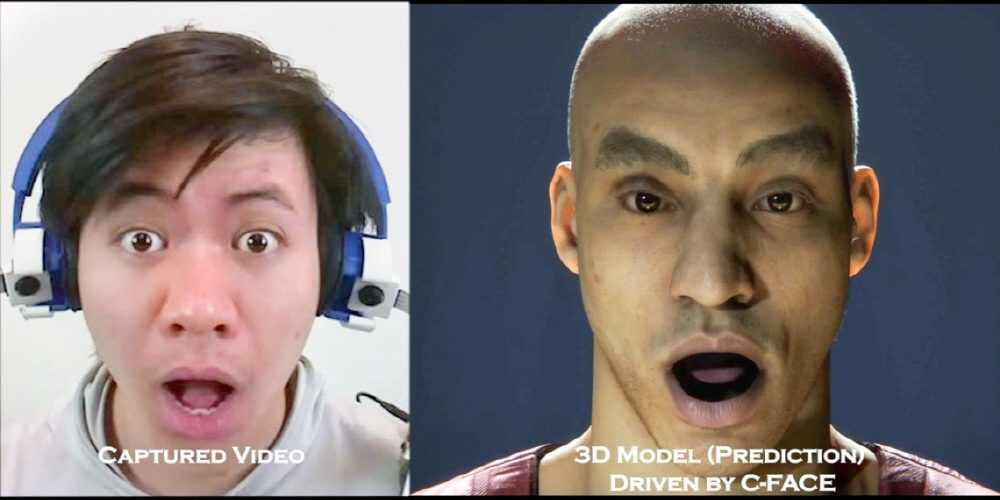

A Cornell University research team has developed C-Face, a headphone-based device that recognizes the wearer's facial expressions and reproduces them on a computer screen.

With these headphones, you can show your facial expressions in real time on a face you have chosen in advance, such as an avatar or another person's face. Video calls are also possible without a webcam. In addition, on social media platforms set in virtual reality, avatars can mirror the user's actual facial expressions, enabling more realistic communication.

According to the Cornell team, the device is simpler and less conspicuous than conventional headset-based facial tracking devices, and it tracks facial expressions more effectively. For example, it can still convey the wearer's expressions when a face mask, as worn during the COVID-19 pandemic, hides them from an ordinary camera. C-Face reconstructs the face automatically by detecting the movement of the wearer's cheek muscles with cameras mounted on the left and right earpieces of the headphones.

The system uses a deep-learning neural network to convert the information captured in the camera images into the movements of 42 feature points on the face, which together make up every expression. In experiments, it reproduced the wearer's expressions with an accuracy of 85% or higher. The main limitation is the limited battery capacity available to power the cameras, and the research team is considering a more efficient battery.

During video calls on services such as Zoom and FaceTime, the other person's voice coming out of the speakers can be picked up by the microphone and cause an echo. In addition, if a computer can generate your face and expressions without a camera, you could skip putting on makeup and avoid showing the other person how you look at home. If video calls and conferencing become more widespread, technologies like C-Face may become popular as well. Related information can be found here.