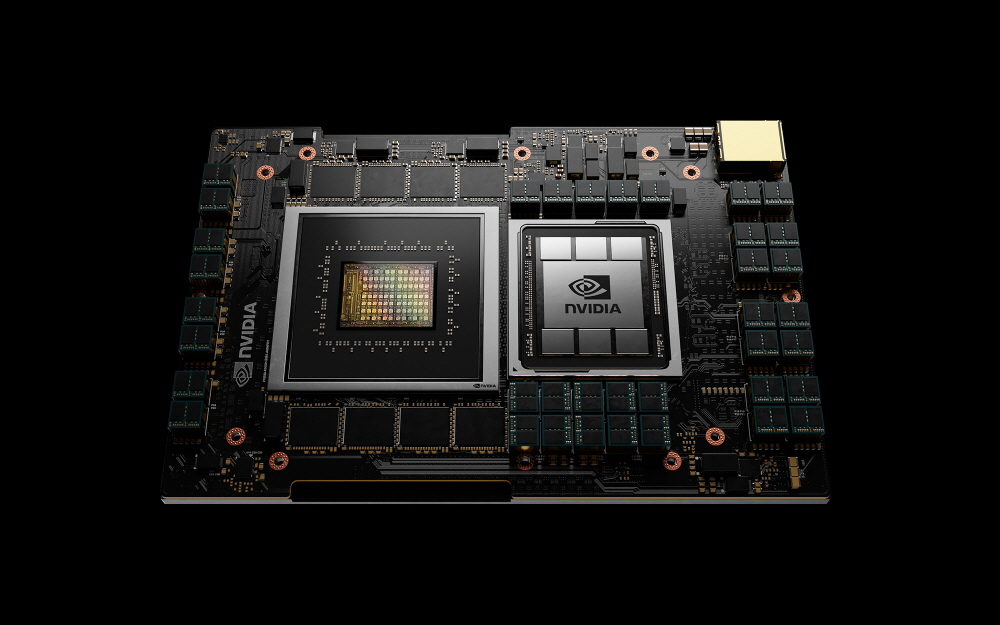

Just as Apple developed the M1 chip, NVIDIA has announced its own ARM-based CPU, Grace. The company’s first data center CPU targets large-scale data processing workloads such as AI supercomputing and natural language processing.

According to NVIDIA, a system equipped with Grace trains a natural language processing (NLP) model with 1 trillion parameters 10 times faster than the x86-based DGX™ system. NVLink interconnect technology underpins this performance gain: it provides 900 GB/s of throughput between the Grace CPU and NVIDIA GPUs, which is said to be 30 times that of today's leading servers. In addition, by adopting LPDDR5x memory, the Grace system is said to deliver 10 times the energy efficiency and twice the bandwidth of DDR4 RAM.

Grace will be adopted by the Swiss National Supercomputing Centre (CSCS) and by Los Alamos National Laboratory in the United States. Both facilities are expected to launch Grace-equipped systems in 2023, by which time systems that adopt Grace are also expected to reach other customers.

NVIDIA also announced a partnership with AWS (Amazon Web Services). NVIDIA GPUs will be offered together with Graviton2, AWS's ARM-based CPU. AWS instances with NVIDIA GPUs can not only run Android games natively, but also stream games to mobile devices and accelerate rendering and encoding.

In addition, NVIDIA has released a developer kit for ARM-based HPC. The kit includes an ARM server CPU with 80 ARM Neoverse cores, two NVIDIA A100 GPUs, and the NVIDIA BlueField-2 DPU, which accelerates networking, security, and storage.

The energy-efficient ARM architecture is considered a good fit for data centers, which consume huge amounts of power. With the Apple M1 appearing in the consumer PC market and ARM-based products now emerging in the data center, ARM can be seen as a new threat to Intel, whose main focus is the x86 architecture. Related information can be found here.
