Google announced the Quantum AI campus, a new research hub for quantum computing, at Google I/O 2021, its online event held May 18-20. The campus, located in Santa Barbara, California, USA, houses Google’s first quantum data center, its quantum hardware research laboratories, and its quantum chip fabrication facility, and is expected to become the center of the company’s quantum computing research.

Erik Lucero, lead engineer of Quantum AI, Google’s quantum computing research team, said in an official blog post that Google aims to develop a practical, error-corrected quantum computer within 10 years. He explained that the Quantum AI campus in Santa Barbara, California was established as the research hub for this goal.

The error-corrected quantum computer that Google is aiming for is meant to overcome the problem that quantum bits (qubits) are exposed to noise, which makes accurate calculation impossible. Realizing it requires physically building 1 million qubits and fitting them all into a quantum computer the size of a single room, but at present only systems with fewer than 100 qubits are practical.

Ultimately, Google intends to build the world’s first quantum transistor and then tile hundreds to thousands of them together to form a quantum computer capable of correcting errors. Once an error-corrected quantum computer is put into practical use, it will be possible to simulate molecular behavior and interactions, which is expected to accelerate research in fields such as better batteries, energy-efficient fertilizer production, new drug discovery, and new AI architectures. Related information can be found here.

During I/O 2021, Google also announced TPU v4, the fourth generation of its TPU (Tensor Processing Unit), a dedicated processor specialized for machine learning. The new processor has already been deployed in Google’s data centers and will be made available to Google Cloud users in late 2021.

In recent years, AI has played an important role in many fields, but training on large amounts of data requires tremendous computation, so a powerful system is important for productivity. The TPU developed by Google is a processor optimized for machine learning and deep learning. TPUs were also used in the 2016 match between Google’s AI AlphaGo and 9-dan professional Lee Se-dol.

Google has continued to introduce new generations of the TPU, announcing the third-generation TPU v3 in 2018. On May 18, it announced the fourth-generation model, TPU v4. TPU v4 is said to deliver more than twice the FLOPS of the previous-generation TPU v3. FLOPS is a measure of how many floating-point calculations can be performed per second.

In addition, thanks to its interconnect technology, bandwidth is greatly increased, and a unit called a pod, which combines 4,096 TPU v4 chips, can provide 1 exaFLOPS of computing performance. This is said to be roughly equivalent to the combined performance of 10 million laptop processors.
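As a rough sanity check, the pod figures quoted above can be broken down per chip and per laptop. The following is only a back-of-the-envelope sketch: it assumes the 1 exaFLOPS figure is a peak number split evenly across the 4,096 chips and across the 10 million laptops, and the names (`POD_FLOPS`, `per_unit_flops`) are illustrative, not part of any Google API. The article does not state the numeric precision behind the figure, so the results are indicative only.

```python
# Back-of-the-envelope arithmetic using only the figures quoted in the article.
# Assumption: performance divides evenly across units (peak, not sustained).

POD_FLOPS = 1e18            # 1 exaFLOPS per TPU v4 pod
CHIPS_PER_POD = 4096        # TPU v4 chips in one pod
LAPTOP_COUNT = 10_000_000   # laptops in the quoted comparison


def per_unit_flops(total_flops: float, units: int) -> float:
    """Split a total FLOPS figure evenly across a number of units."""
    return total_flops / units


per_chip = per_unit_flops(POD_FLOPS, CHIPS_PER_POD)
per_laptop = per_unit_flops(POD_FLOPS, LAPTOP_COUNT)

print(f"Implied per TPU v4 chip: {per_chip / 1e12:.0f} TFLOPS")
print(f"Implied per laptop:      {per_laptop / 1e9:.0f} GFLOPS")
```

Under these assumptions, each chip works out to roughly 244 TFLOPS and each laptop in the comparison to about 100 GFLOPS.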

Google CEO Sundar Pichai called this a historic milestone. Previously, getting an exaFLOPS of computing power required building a custom supercomputer, but Google has already deployed many TPU v4s and will soon have dozens of TPU v4 pods in its data centers. He added that these pods will run largely on carbon-free energy. Google Cloud users are expected to be able to use TPU v4 pods in late 2021.

Meanwhile, during this event, Google announced a new search algorithm, the Multitask Unified Model (MUM).

Today, using a search engine such as Google is essential for gathering the information you need. However, with existing search engines, the more complex the situation you are asking about, the more queries you need. Also, since a search engine is not an expert, it has difficulty accurately selecting and displaying the information the user is looking for.

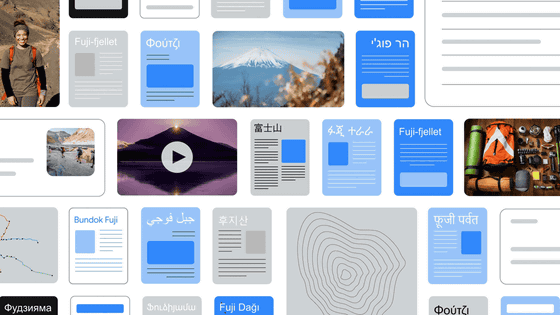

This is why Google developed MUM. Like BERT, the existing natural language processing model, MUM is built on the Transformer, a neural network architecture that excels at language comprehension tasks. Because it is trained on 75 different languages and many tasks at once, it can not only understand language but also generate it. In addition, it can understand information across text and images, and is expected to handle video and audio in the future.

For example, if you only want to know about Mt. Baekdu in general, you can enter it into a search engine and related pages will appear. However, if you have climbed Mt. Adams before and now want to know how to prepare to climb Mt. Baekdu, the existing search algorithm requires you to search several times with various combinations of words to get an accurate answer.

MUM, however, understands this question and compares Mt. Adams with Mt. Baekdu. It also understands that preparing for a climb involves training and buying the right equipment, so it can provide information such as the altitude of the two mountains, the weather, and what clothing is needed.

In addition, much of the information about Mt. Baekdu is published on Korean sites, so with the existing search algorithm you had to search in Korean to find it. Because MUM gathers and presents knowledge from sources across language barriers, even Americans can immediately look up scenic spots on Mt. Baekdu and nearby stores.

Another characteristic of MUM is that it can understand image information. For example, if you take a picture of your usual hiking boots and ask the search engine whether you can climb Mt. Baekdu in them, MUM understands both the boots and the question, and can answer that they will work without problems or display a list of other recommended gear.

MUM is currently at an experimental stage and is expected to be put into practical use within a few months to a few years. Google describes it as an important milestone toward a future in which its search engine can understand the various ways people naturally communicate and interpret information.

Google also announced plans to make educational content accessible in more than 100 languages through Google Lens, which searches based on what a smartphone camera captures, and to display AR content in search results. In addition, the route search function in Google Maps was improved during this event. Related information can be found here.
