On September 29, 2021, Google held its annual Search On event. At the event, Google revealed new Google Search features built on a new AI model, MUM (Multitask Unified Model).

MUM, which Google announced at its developer conference Google I/O 2021 on May 19, is a machine learning technology built on the Transformer, a neural network architecture that is good at language comprehension. It understands text and image information, and in the future it is expected to interpret audio and video as well.

One of the main features of MUM is the integration of image search and text search through an enhanced Google Lens, Google's image recognition technology. For example, you can take a picture of a bicycle part and search for how to fix it, which lets you look up the repair method even if you do not know that the part is called a rear derailleur.

In addition, in the Lens mode of the Google Search app, you can run a new search on an image displayed during a search, or on just part of that image. This is said to be coming soon to the Google Search app for iOS, but only in the US. A feature that lets you select any image or video and immediately get search results is also said to be coming to Google Chrome on desktop, and it will be rolled out globally.

MUM, which excels in both natural language processing and image recognition, can add more images and photos to search results. In the past, only a few images were displayed at the top of the search results, so if you wanted to see more images, you had to switch to the image search tab. With MUM, the results page itself is meant to let you take in both text and images.

Google also announced an expansion of About this result, a feature that provides information about the site a link leads to.

This feature had been in beta testing since February 2021. Previously, you could click the icon to the right of a search result link to see why the result was ranked at the top. With this enhancement, you can also view search results for the linked site's name and articles from other sites that cover the same topic in detail. Related information can be found here.

Google has also announced that it will add a wildfire layer to Google Maps for viewing wildfire information, and that it will expand the scope of Tree Canopy Insights, which identifies the places in a city where trees are best planted.

Recently, wildfires have become more frequent due to global climate change, and in September more than 50,000 people were ordered to evacuate because of a wildfire in California, USA. In an emergency, accurate wildfire information is important for protecting yourself, so in 2020 Google released a wildfire boundary map for the United States based on satellite data.

The wildfire boundary map let people in the US get an approximate picture of a fire's size and extent. Now Google has announced that it is bringing its wildfire information together and adding it as a new layer in Google Maps. The new wildfire layer marks fire areas in red on the map. Tap a wildfire to see detailed, up-to-date information about the fire.

You can find websites to refer to in an emergency, phone numbers to call for help, links to resources from local governments such as detailed evacuation orders, and information such as the extent of the fire and the firefighting response. The wildfire layer will roll out globally on Android from the last week of September to early October, and on iOS and desktop in October. In the United States, a more detailed layer will be provided using data from the National Interagency Fire Center, and similar detailed data will be extended to Australia and other countries in the coming months.

The urban heat island effect, in which a city is hotter than its surrounding suburbs, disproportionately affects low-income communities and causes many public health problems such as poor air quality and dehydration. Street trees and trees planted in parks are known to shade people and buildings from direct sunlight and to lower temperatures by releasing heat as moisture evaporates, so it is important for city planners to plant trees efficiently.

So, in November 2020, Google launched Tree Canopy Lab (Tree Canopy Insights), a tool that combines aerial imagery with AI to help identify urban tree density and locations at high risk of rapid temperature rises, and to assist with tree planting.

Until now, Tree Canopy Lab was only available in 15 cities in the United States, but Google has announced that it will expand to 100 cities around the world, including London, Sydney and Toronto.

Google also introduced Address Maker, an app for creating new addresses. Many people take having an address for granted, but around the world many people have no officially registered address, and places without street names or numbers are a particular problem in developing countries. Without an official address, everyday activities such as voting in elections, opening a bank account, applying for jobs, and receiving mail are restricted.

Therefore, Google has created Address Maker, an address-making app built on Plus Codes, an open source system that assigns a unique code to every location on the map. With Address Maker, new addresses can be created and assigned on a map, cutting the time needed from years to weeks and reducing costs. Governments and NGOs in Gambia, Kenya, South Africa, and the United States are already using Address Maker, and other local governments and NGOs can request access through an application form. Related information can be found here.
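
To give a feel for how Plus Codes turn a location into a short code, here is a minimal Python sketch of the standard 10-digit encoding as described in the open source Open Location Code specification. It is a simplified illustration, not Google's reference library and not how Address Maker itself is implemented: the function name `encode_plus_code` is made up for this example, and features such as shortened codes, padding, and extra-precision digits are left out.

```python
# Simplified sketch of 10-digit Plus Code (Open Location Code) encoding.
# Assumption: encode_plus_code is a hypothetical helper for illustration only.

ALPHABET = "23456789CFGHJMPQRVWX"  # the 20 symbols used by Plus Codes
CELLS_PER_DEGREE = 8000            # finest pair resolution is 1/8000 degree


def encode_plus_code(lat: float, lng: float) -> str:
    """Return the 10-digit Plus Code for a latitude/longitude in degrees."""
    # Clamp latitude to the valid range and wrap longitude into [0, 360).
    lat = min(max(lat, -90.0), 90.0)
    lng_shifted = (lng + 180.0) % 360.0

    # Convert to non-negative integer grid coordinates.
    lat_val = int((lat + 90.0) * CELLS_PER_DEGREE)
    lng_val = int(lng_shifted * CELLS_PER_DEGREE)
    # Latitude 90 would fall just outside the grid, so pull it back in.
    lat_val = min(lat_val, 180 * CELLS_PER_DEGREE - 1)

    # Peel off five base-20 digit pairs, least significant pair first.
    digits = []
    for _ in range(5):
        digits.append(ALPHABET[lng_val % 20])
        digits.append(ALPHABET[lat_val % 20])
        lat_val //= 20
        lng_val //= 20

    # Reverse so each pair reads latitude digit, then longitude digit.
    code = "".join(reversed(digits))
    # The "+" separator always follows the eighth character.
    return code[:8] + "+" + code[8:]
```

With this sketch, `encode_plus_code(47.365590, 8.524997)` returns `8FVC9G8F+6X`, and `encode_plus_code(0.0, 0.0)` returns `6FG22222+22`. Each extra pair of digits narrows the cell by a factor of 20 in both directions, which is why a short code is enough to identify an area of roughly 14 by 14 meters.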

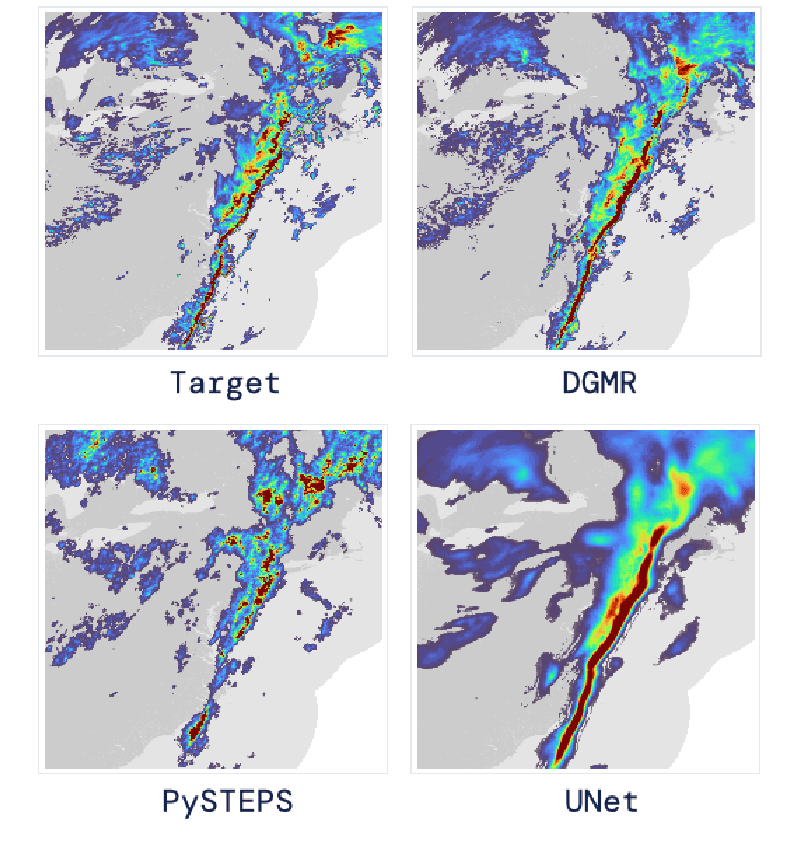

Meanwhile, DeepMind, which like Google is an Alphabet subsidiary and is well known for predicting protein structures with deep learning and developing Go-playing AI, announced that it has developed DGMR, a deep generative model that can predict the probability of precipitation up to 90 minutes ahead with high accuracy. Forecasting how the weather will change within the next two hours is a difficult problem, so this model is expected to greatly improve forecast accuracy.

Modern weather forecasting uses numerical weather prediction, which forecasts future weather by numerically computing the motion of fluids in the atmosphere. Numerical forecasts are good at predicting the weather from about 6 hours to 2 weeks ahead, but they are less accurate for the next 2 hours.

To improve the accuracy of these short-term forecasts within 2 hours, DeepMind built the deep generative model DGMR. It learned the motion of rain clouds captured by weather radar using techniques such as the generative adversarial network (GAN), which is also used for image generation, and was designed to predict and generate rain cloud motion 5 to 90 minutes ahead.

To verify the accuracy of DGMR's predictions, DeepMind prepared two existing precipitation forecasting models for comparison and asked 56 weather forecasters to evaluate each one. As a result, 89% said that DGMR was the most accurate and useful. DGMR's predictions are said to balance the intensity and extent of precipitation and to be close to actual observations. Related information can be found here.
