Google is introducing a new language processing technology, BERT, to its search engine to improve search results. Google says the adoption of BERT is the biggest leap forward in the past five years and one of the biggest leaps forward in the history of Search. BERT is being introduced first in English-language Google Search and will later be expanded to other languages.

BERT (Bidirectional Encoder Representations from Transformers) is a language processing model based on machine learning. It interprets words according to their context, a long-standing headache for search engines, more precisely than earlier approaches. Google released the BERT model as open source in 2018.

Interpreting words according to context means that when a word has multiple meanings, the model looks at the entire sentence containing it and selects the appropriate meaning. A word with multiple meanings cannot, of course, be resolved by looking at the word alone. For phrases containing such ambiguous words, BERT refers to the entire sentence to select the meaning.
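
The idea of choosing a meaning from the surrounding sentence can be illustrated with a deliberately simplified sketch. It is not how BERT works internally: BERT uses learned contextual representations, whereas this toy example only counts overlapping cue words. The word "bank", the two senses, and the cue-word lists are all hypothetical and chosen purely for illustration.

```python
import re

# Hypothetical mini sense inventory for the ambiguous word "bank".
# The senses and cue words are assumptions made up for this example.
SENSES = {
    "bank": {
        "financial institution": {"money", "loan", "deposit", "account", "cash"},
        "river edge": {"river", "water", "fishing", "shore", "boat"},
    }
}


def tokenize(sentence):
    """Lowercase the sentence and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", sentence.lower())


def pick_sense(word, sentence):
    """Return the sense of `word` whose cue words overlap most with the sentence."""
    # The context is every other word in the sentence, not just the neighbors.
    context = set(tokenize(sentence)) - {word}
    scores = {sense: len(cues & context) for sense, cues in SENSES[word].items()}
    return max(scores, key=scores.get)


print(pick_sense("bank", "I need to deposit money at the bank"))
print(pick_sense("bank", "We went fishing on the bank of the river"))
```

The same word receives a different interpretation in each sentence because the decision depends on the whole sentence rather than on the word alone.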

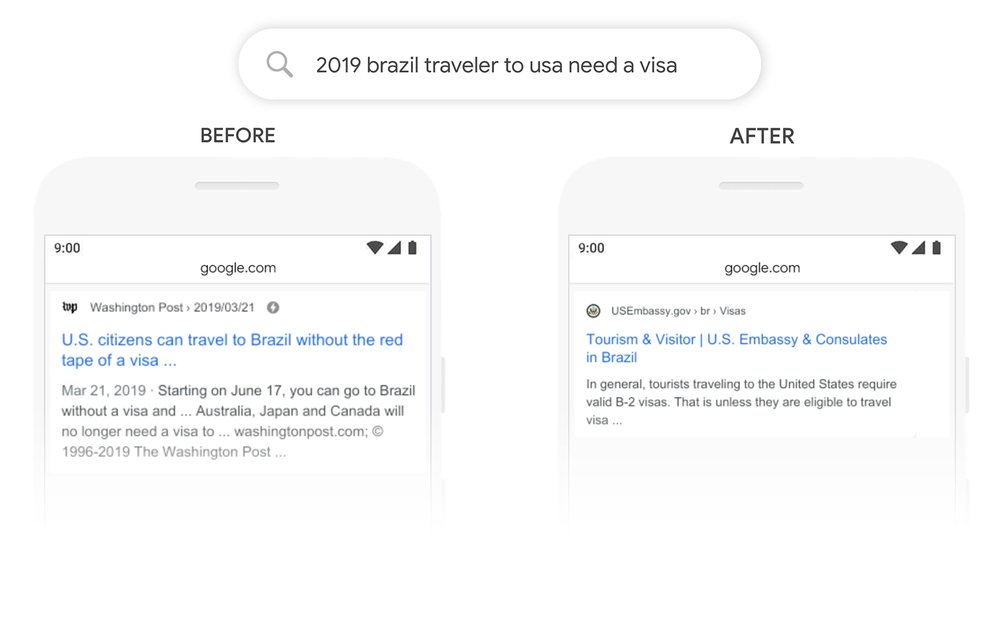

With BERT in search, accuracy improves even when a query is typed the way people speak. Google offers a few examples from its English search engine. For example, the query ‘Can you get medicine for someone pharmacy’ can be understood as asking how to buy medicine for a sick family member or friend rather than for yourself. Until now, however, the search algorithm did not recognize the significance of ‘someone’ and returned results about how to buy medicine at a pharmacy in general.

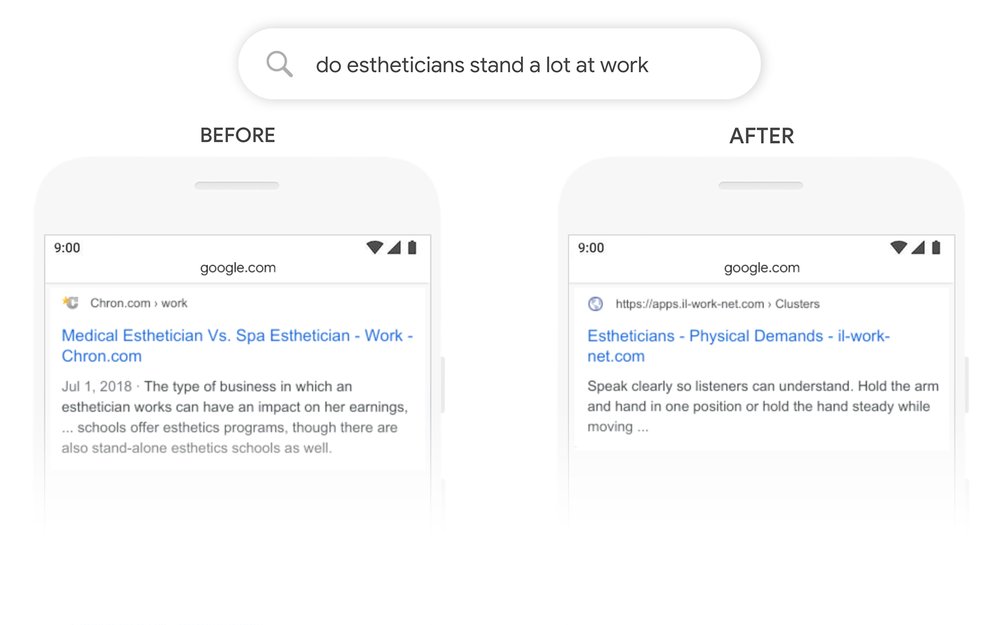

Similarly, in the query ‘do estheticians stand a lot at work’, ‘stand’ has several meanings and ‘at work’ is a colloquial phrase. Until now, Google Search had difficulty interpreting such a sentence. Even with a phrase like this, BERT is said to be able to display relevant results by ruling out meanings that do not fit the context.

On the other hand, some queries still do not work well even with the BERT model applied. For example, the query ‘what state is south of Nebraska’ is meant to ask which state lies to the south of Nebraska, but BERT displays the homepage of a small community in southern Nebraska rather than Kansas.

The BERT model is currently used only in the English version of Google Search, but it also has interesting properties for multilingual rollout. Google explains that models trained with BERT in one language can be applied to other languages. When part of the English-trained model was applied experimentally, search results are said to have improved significantly in 20 languages, including Korean, Hindi, and Portuguese.

Google Search has come to dominate numerous local search markets around the world in its history of more than 15 years. Even now, it handles billions of searches every day. Changes to its search algorithms can have a big impact on website operators and users. Yet Google rarely describes how its search algorithm works in public, and the algorithm remains a black box. Of course, BERT is just one element of Google Search. Even so, for Google to hold a presentation on a search update and explain how it works is something it has not done before. Google may be feeling the need to make Google Search more transparent. Related information can be found here.
