In 2018, the world's first assassination attempt using an unmanned aerial vehicle was carried out in Venezuela, and the extremist group calling itself the Islamic State has been confirmed to be using consumer drones loaded with explosives.

Amid this growing use of drones as weapons, the UN has reported that a fully autonomous drone may have succeeded in attacking a human target. If true, this would reportedly be the first case of an autonomous weapon with machine learning capabilities killing a human.

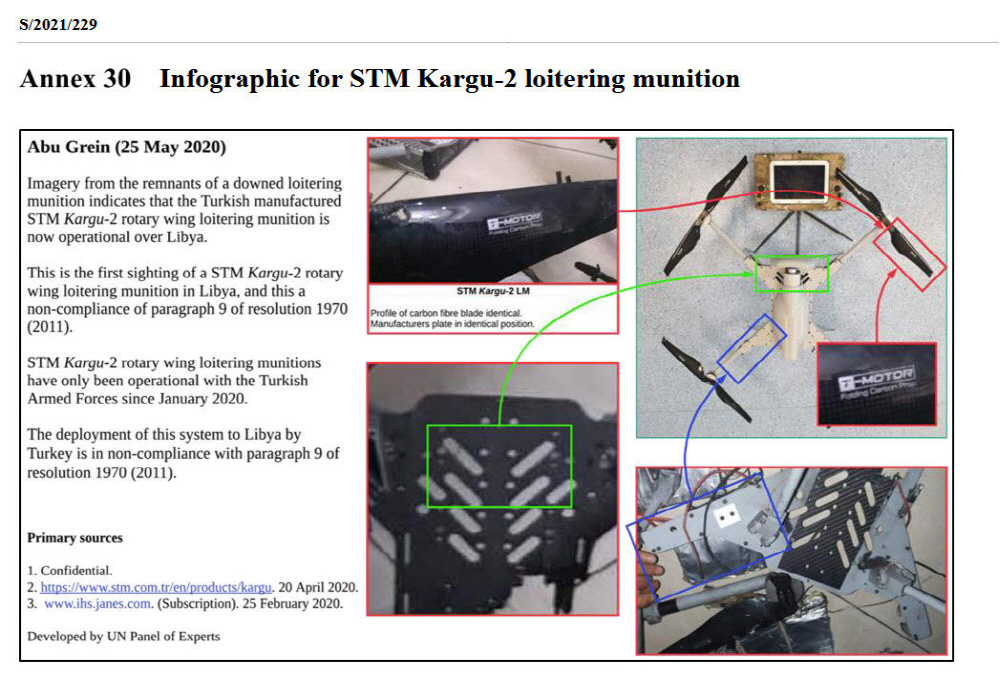

In March 2021, the United Nations published a report by an expert panel that the Security Council established on the civil war in Libya. In it, the panel reported that Turkish-made Kargu-2 drones may have pursued and attacked soldiers during a battle in March 2020.

The Kargu-2 is a quadcopter-type drone developed by STM, a Turkish defense company, and is also called a kamikaze drone because it attacks by destroying itself. It is not known whether any soldier targeted by a Kargu-2 was killed. However, since the expert panel describes the drone as a lethal autonomous weapon system, it is considered highly likely that the attack was fatal.

The Kargu-2 is reported to have a facial recognition system that lets it find and attack targets on its own. The report assesses that the lethal autonomous weapons were programmed to attack targets without requiring a data connection between the operator and the drone, which in effect gives them a true "fire, forget and find" capability.

According to news reports, if this is true, it is the first case of a machine-learning-based autonomous weapon being used to kill, and it shows that warfare has entered a dangerous era. In light of this case, experts point out that regulation of AI-equipped weapons is urgently needed. One expert said that discussing such regulation is both urgent and important because technology is not waiting for us. Related information can be found here.
