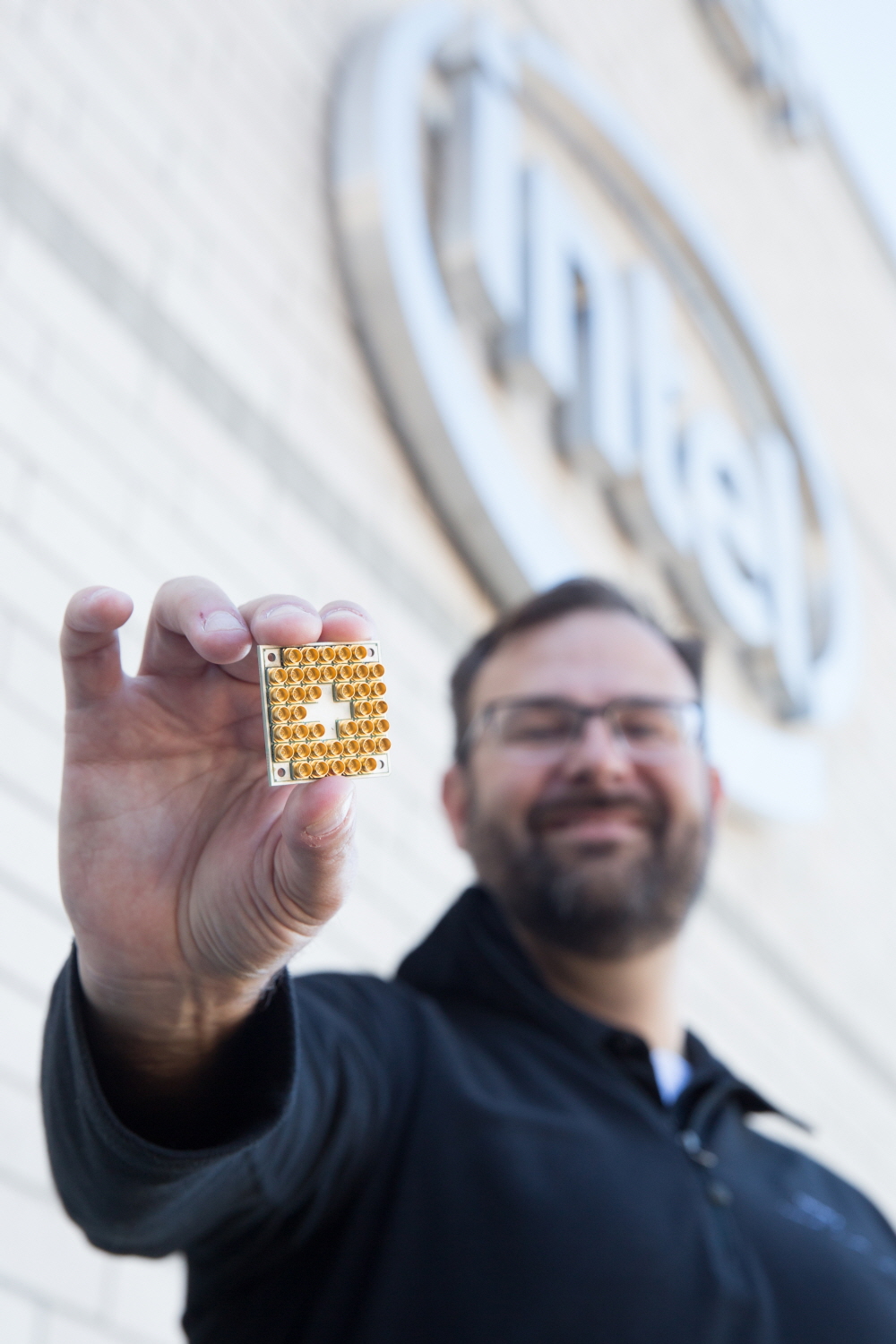

The era of the quantum processor is opening. Google announced a quantum processor, Bristlecone, on March 5 (local time). In a conventional PC processor, each bit is in a state of either 0 or 1. Bristlecone, however, has 72 quantum bits (qubits), each of which can hold 0 and 1 in superposition at the same time. Google hopes that such quantum processors will enable computation that goes beyond the limits of conventional computing.

Google has long had its sights on quantum computers. It has been developing quantum computing technology since it acquired a D-Wave machine, and in 2014 it tested the performance of the D-Wave 2. At the time, however, the machine showed no performance advantage over existing computers.

A quantum computer uses qubits, which can be 0 and 1 at the same time. The number of qubits is an indicator of a quantum computer's performance. However, the state of a qubit cannot be observed directly and accurately; observing it requires yet more qubits. For example, breaking an RSA cryptosystem with a 2,000-bit public key within one day on a quantum computer running Shor's algorithm is said to require about 100 million qubits. Most of those 100 million qubits are used to generate the special quantum states needed for error correction as well as for executing the computation. In other words, a large number of qubits must be provided to reduce the qubit errors that can occur during computation. Bristlecone aims to provide a test platform for measuring these qubit error rates and performance. It can also be used for quantum simulation and machine learning.

According to Google, the error rates are 1% for readout, 0.1% for single-qubit gates, and 0.6% for two-qubit gates. Bristlecone uses the same scheme for readout and logic operations and has 72 qubits. Google says about 49 qubits are needed to reach quantum supremacy, the state in which a quantum computer can perform computations that go beyond the limits of conventional computing. With 72 qubits, Bristlecone is likely to leave room to scale against future technical challenges.
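To see why these error rates matter, the short Python sketch below estimates how quickly errors pile up. It assumes, purely for illustration, that every operation fails independently with the same probability; real devices do not behave this simply, and the function names are hypothetical.

```python
import math


def error_free_probability(error_rate, n_operations):
    """Chance that n_operations all succeed, assuming independent errors."""
    return (1 - error_rate) ** n_operations


def max_operations(error_rate, min_success=0.5):
    """Largest number of operations that keeps the error-free chance
    at or above min_success, under the same independence assumption."""
    return math.floor(math.log(min_success) / math.log(1 - error_rate))


if __name__ == "__main__":
    two_qubit_error = 0.006  # the 0.6% two-qubit figure quoted above
    for n in (10, 100, 1000):
        p = error_free_probability(two_qubit_error, n)
        print(f"{n:5d} two-qubit gates -> error-free chance {p:.3f}")
    print("gates before the chance drops below 50%:",
          max_operations(two_qubit_error))
```

Under this simplified model, even a sub-1% error rate allows only a limited number of operations before a mistake becomes more likely than not, which is why error rates are tracked alongside the qubit count.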

Google unveiled Bristlecone to show its confidence in the future of quantum computers and in reaching quantum supremacy. Realizing this kind of quantum computing, however, will take more time, including time to develop the software, because many different pieces have to work together.

Whatever the controversy surrounding quantum computers, it is worth remembering that Moore’s law has been running into limits since 2015, so there is a ceiling on how fast existing computers can process digital data made up of countless 0s and 1s.

The components of an existing computer are simple: storage, arithmetic, and control units. Computers contain chip modules, basic modules, logic gates, and transistors. A transistor is a simple switch that either passes or blocks information. The information flowing through it is the bit, the well-known minimum unit, which can only have a value of 0 or 1. However, combining several bits makes it possible to represent more complex information.

Logic gates also perform only simple calculations. An AND gate, for example, outputs 1 if all of its inputs are 1 and 0 otherwise. Combine such gates, however, and you can carry out calculations such as addition and multiplication. Seen this way, even a high-end computer is like a crowd of 7-year-old children, each solving a very simple problem. But as their numbers grow, physics simulations and complex 3D games become possible.
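The way simple gates combine into arithmetic can be sketched in a few lines of Python. This is only an illustration: the function names are made up for the example, and real hardware wires gates together rather than calling functions.

```python
def AND(a, b):
    """Outputs 1 only when both inputs are 1."""
    return a & b


def OR(a, b):
    """Outputs 1 when at least one input is 1."""
    return a | b


def XOR(a, b):
    """Outputs 1 when exactly one input is 1."""
    return a ^ b


def full_adder(a, b, carry_in):
    """Add three single bits using only gates; returns (sum_bit, carry_out)."""
    partial = XOR(a, b)
    sum_bit = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return sum_bit, carry_out


def add_bits(x, y, width=4):
    """Add two non-negative integers of `width` bits by chaining full adders."""
    carry = 0
    result = 0
    for i in range(width):
        a = (x >> i) & 1  # i-th bit of x
        b = (y >> i) & 1  # i-th bit of y
        sum_bit, carry = full_adder(a, b, carry)
        result |= sum_bit << i
    return result | (carry << width)  # the final carry becomes the top bit


if __name__ == "__main__":
    print(add_bits(5, 6))
```

Each gate on its own is trivial; the adder only appears once several of them are chained, which is the point the paragraph above makes.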

A transistor is an electrical switch, and current is the motion of electrons through that switch. A transistor made with a 14nm process is only about one-eighth the size of HIV and about one five-hundredth the size of a red blood cell. The smaller transistors become, the more likely it is that the tunneling effect will cause leakage. Ultimately, current technology will someday reach its physical limit.

Quantum computers are expected to reach a different level of processing speed by exploiting the ability to hold both 0 and 1 at the same time. In existing computers, processing power is basically proportional to the number of processors doing the work. For quantum computers, by contrast, it has been shown theoretically that capacity grows exponentially with the number of qubits. This is why the quantum computer is expected to be a breakthrough that opens the next generation of technology.

Take searching a database as an example. A conventional search may have to examine every element, so finding the data one item at a time takes a long time. A quantum computer, however, can complete the search in a time proportional to the square root of that. In other words, a search that would have taken a million seconds could finish in 1,000 seconds. Likewise, quantum computers can be expected to be used in fields that require a large amount of calculation, such as simulation.
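The square-root speedup for search described above corresponds to what is known as Grover's algorithm. The sketch below simulates it on an ordinary computer with a plain list of amplitudes. The function names are hypothetical, and the simulation itself gains no speed (each round touches every amplitude); it only shows that roughly the square root of N rounds is enough to single out the target.

```python
import math


def classical_search(items, target):
    """Check items one by one; returns (index, number_of_checks)."""
    for checks, item in enumerate(items, start=1):
        if item == target:
            return checks - 1, checks
    return None, len(items)


def grover_search(n_items, target_index):
    """Simulate Grover's algorithm over n_items entries.

    Returns (most_likely_index, rounds_used, probability_of_that_index).
    """
    # Start in an equal superposition over every index.
    amps = [1 / math.sqrt(n_items)] * n_items
    rounds = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(rounds):
        # Oracle: flip the sign of the target's amplitude.
        amps[target_index] = -amps[target_index]
        # Diffusion: reflect every amplitude about the mean.
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]
    probs = [a * a for a in amps]
    best = max(range(n_items), key=probs.__getitem__)
    return best, rounds, probs[best]


if __name__ == "__main__":
    n = 1024
    _, checks = classical_search(list(range(n)), n - 1)
    found, rounds, prob = grover_search(n, n - 1)
    print(f"classical worst case: {checks} checks")
    print(f"Grover simulation: {rounds} rounds, index {found}, p={prob:.4f}")
```

For 1,024 entries the classical worst case inspects every entry, while the simulated quantum search needs only a few dozen rounds, mirroring the million-to-thousand ratio in the text.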

The scientific journal Nature said that in 2017 quantum computers would move from research to development. Indeed, in March of that year IBM announced IBM Q ( http://research.ibm.com/ibm-q/ ), a cloud-based service that lets users experiment with quantum computing. Intel also said in 2017 that it had successfully produced a prototype superconducting chip with 17 qubits.

However, as mentioned above, there are still many barriers to practical realization. Quantum computers that use 0 and 1 at the same time achieve massively parallel processing through quantum superposition and quantum entanglement. (A qubit can exist in two states at once rather than one, which is called superposition. In an existing computer, 4 bits can represent only one of 16 possible values at a time, whereas 4 qubits can hold all 16 at once, and this number grows exponentially as qubits are added. Quantum entanglement is a phenomenon in which two qubits remain linked, so that their states are correlated even when they are separated from each other.)
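The 4-bit example can be made concrete with a short Python sketch. It lists the 16 values a 4-bit register can take one at a time, then builds an equal superposition that assigns an amplitude to all 16 at once. The helper names are hypothetical, and storing amplitudes in a dictionary is just a convenient way to picture the state, not how a quantum device works.

```python
from itertools import product


def classical_states(n_bits):
    """Every value an n-bit register could hold; it holds only one at a time."""
    return ["".join(bits) for bits in product("01", repeat=n_bits)]


def uniform_superposition(n_qubits):
    """Equal-weight superposition: one amplitude for each basis state."""
    dimension = 2 ** n_qubits
    amplitude = 1 / dimension ** 0.5
    return {state: amplitude for state in classical_states(n_qubits)}


if __name__ == "__main__":
    print(len(classical_states(4)), "possible 4-bit values")
    state = uniform_superposition(4)
    print(len(state), "amplitudes held at once by 4 qubits")
    # The squared amplitudes are probabilities and must add up to 1.
    print("total probability:", sum(a * a for a in state.values()))
    # Describing 72 qubits this way would need 2**72 amplitudes.
    print("amplitudes for 72 qubits:", 2 ** 72)
```

The count doubles with every added qubit, which is also why simulating even a few dozen qubits this way quickly becomes impractical on an ordinary computer.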

What matters when processing in parallel with multiple qubits is keeping them in a state of quantum coherence. To calculate accurately with qubits, they must remain in a coherent state that is free of unwanted interference. If coherence is not maintained, the qubits disturb one another's quantum states and no answer can be obtained.

So far, with current technology, this coherent state can be sustained for only about a second. For a computer that performs more than 100 million operations per second, one second is of course quite a long time. But overcoming this instability is still the biggest challenge for the reliability of quantum computers.

In addition, there is the difficulty that a quantum state cannot, in principle, be measured from the outside. This follows from the fundamental property that a quantum state changes when it is measured. The awkward consequence is that there is no direct way to confirm whether the computer is working properly. One method devised to address this is to couple qubits with other qubits and check the qubit state indirectly.

However, this method requires more qubits, because other qubits must be used to make the computing qubits reliable. To verify a single calculation and improve performance, anywhere from a few hundred to as many as 10,000 times as many qubits may be needed. Ultimately, the error rate that arises in this process greatly limits how long a quantum computer can keep computing.
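The idea of checking a state indirectly through helper qubits can be pictured with a classical analogy, the three-bit repetition code. The Python sketch below is not a quantum simulation: the parity checks merely stand in for helper qubits, and all names are hypothetical. It shows how an error can be located from the parities alone, and why protecting one bit already costs several.

```python
def encode(bit):
    """Protect one logical bit by storing three copies."""
    return [bit, bit, bit]


def syndrome(data):
    """Two parity checks, standing in for helper qubits.

    Each check reports only whether two neighbours agree,
    never the value of the data itself.
    """
    return (data[0] ^ data[1], data[1] ^ data[2])


# Which position a single flip must be in, given the two parities.
SYNDROME_TO_POSITION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(data):
    """Undo a single flipped bit using only the parity checks."""
    position = SYNDROME_TO_POSITION[syndrome(data)]
    fixed = list(data)
    if position is not None:
        fixed[position] ^= 1
    return fixed


def decode(data):
    """Recover the logical bit by majority vote."""
    return int(sum(data) >= 2)


if __name__ == "__main__":
    block = encode(1)
    block[2] ^= 1  # inject a single error
    print("corrupted:", block, "syndrome:", syndrome(block))
    print("corrected:", correct(block), "decoded:", decode(correct(block)))
```

Here three bits plus two checks protect a single bit against one flip; the far larger overheads quoted in the text come from having to handle many more kinds of error at once.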

Rather than correcting errors, some designs aim to prevent errors from occurring or to make their effects negligible. However, a device without error correction may be unable to produce results that surpass an existing computer. A quantum computer that is still at a stage where many errors occur cannot be said to be superior to an existing computer. In addition, as the number of qubits grows, the power consumed by the whole computer grows with it.

On the other hand, improving algorithm efficiency is also being considered, rather than solving the problem simply by adding more qubits. For a quantum computer to calculate accurately, it must finish processing while coherence is maintained. What matters here is how to use algorithms that complete the calculation efficiently. Either way, it seems that solving these technical challenges will take more time.

With Google introducing a quantum processor, a new era of quantum computing may be opening. It can be said that a foundation is now in place for building the entire quantum computing system in a consistent way, including the hardware and control architecture as well as the software for quantum computing.
