Is artificial intelligence the future of translation? In 2016, the tenth anniversary of Google Translate, a service that supports more than 100 languages, Google announced GNMT (Google Neural Machine Translation), a system that uses artificial intelligence to produce more natural translations.

GNMT replaces PBMT (phrase-based machine translation), the system Google Translate had used until then. Instead of mechanically breaking a sentence into words and phrases and translating each piece, GNMT treats the entire sentence as a single unit of translation. Treating the whole sentence as the unit requires fewer engineering design choices, and it can improve both the accuracy and the speed of translation. At the time, Google announced that GNMT reduced translation errors by 55 to 85 percent. Google also said that, in some cases, GNMT translations approach human quality.

Google’s first showcase for GNMT was Chinese: the system was deployed for Chinese-to-English translation, a language pair that handles about 18 million translations a day. Chinese also featured in a later milestone. On March 14 (local time), Microsoft announced that its own AI-based technology for translating Chinese into English had reached the same level of quality as human translation (see link: https://blogs.microsoft.com/ai/machine-translation-news-test-set-human-parity/).

According to the announcement, Microsoft checked the quality of its system on newstest2017, a news test set released at WMT17, a research conference held in autumn 2017. Microsoft also said it used external evaluators to compare the system’s output with human translations, and they confirmed that the AI translations were comparable to human ones in accuracy and quality.

Microsoft describes machine translation reaching human quality as a major milestone for translation, one of the most difficult tasks in language processing.

However, much work remains before AI translation truly matches humans. Microsoft itself says many challenges remain even for real-time news translation. Translation quality has to reach the same level across news in general, not just on one test set, and translations must be offered in many more languages and read and sound more natural.

Predictions that artificial intelligence will keep evolving until machines can work in place of humans are common, and a survey of 352 scientists who presented AI research at academic conferences in 2015 is a useful reference. Among its results, respondents expected machines to be able to translate languages by 2024. The survey defined high-level machine intelligence (HLMI) as the point at which machines can perform every task better than humans without human help. Respondents put the probability of HLMI arriving at 50% within the next 45 years and at 10% within the next nine years. They also predicted that machines would be able to drive trucks by 2027, replace salespeople by 2031, and work as surgeons by 2053.

Judging from these results, AI can be expected to translate better than amateur human translators within about the next ten years. Still, as Microsoft says, there are many obstacles to overcome. One is data: improving translation accuracy with neural networks still requires training data made up of millions of translated articles. Last year, Google announced a new technology that lets neural networks learn to translate without such translated data.

Conventional AI translation needs a large volume of documents to serve as training data. That is why accuracy is higher for widely used languages such as English and French, while for minor languages with little data, low accuracy is unavoidable.

Until now, machine learning for translation required humans to teach the AI first by supplying translated examples. Google’s newly announced method is different: instead of being taught by humans, the AI builds its own dictionary. Languages share similarities; for example, the words “desk” and “chair” are often used together in many languages, so a dictionary can be built by mapping words across languages based on such common patterns. The translation dictionary is then completed by repeating this learning and refinement several times.
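To make the mapping idea concrete, here is a toy sketch of one published approach to building a dictionary without translated examples; it is not necessarily the method Google used. Real systems first learn word vectors from co-occurrence statistics (so “desk” and “chair” end up close together), then align the two languages’ vector spaces. The sketch assumes hand-set 2-D vectors and a Spanish space that is exactly a rotation of the English one; the function names `induce_dictionary`, `rotate` and `nearest` are hypothetical. It picks a starting rotation with no seed dictionary, then alternates between building a nearest-neighbour dictionary and refitting the rotation (orthogonal Procrustes), echoing the “learn and refine several times” loop described above.

```python
import math

def rotate(v, theta):
    """Rotate a 2-D vector counter-clockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])

def nearest(v, space):
    """Word in `space` whose vector is closest to v."""
    return min(space, key=lambda w: math.dist(v, space[w]))

def induce_dictionary(src, tgt, grid=72, iters=5):
    # 1) No seed dictionary: choose the rotation (from a coarse grid of
    #    angles) that best overlays the source cloud on the target cloud.
    def mismatch(theta):
        total = 0.0
        for v in src.values():
            r = rotate(v, theta)
            total += math.dist(r, tgt[nearest(r, tgt)])
        return total
    theta = min((2 * math.pi * k / grid for k in range(grid)), key=mismatch)
    for _ in range(iters):
        # 2) Current dictionary: nearest target word for each source word.
        pairs = {w: nearest(rotate(v, theta), tgt) for w, v in src.items()}
        # 3) Refine: closed-form best 2-D rotation for these pairs
        #    (orthogonal Procrustes restricted to rotations).
        a = sum(src[w][0] * tgt[t][0] + src[w][1] * tgt[t][1] for w, t in pairs.items())
        b = sum(src[w][0] * tgt[t][1] - src[w][1] * tgt[t][0] for w, t in pairs.items())
        theta = math.atan2(b, a)
    return {w: nearest(rotate(v, theta), tgt) for w, v in src.items()}

# Toy data (assumption): hand-set English vectors.
english = {"desk": (1.0, 0.1), "chair": (0.8, 0.5), "dog": (-0.4, 0.9),
           "cat": (-0.7, 0.6), "sun": (0.2, -1.0)}
# Ground truth is used ONLY to build the toy Spanish space; the
# algorithm never sees these pairs.
truth = {"desk": "escritorio", "chair": "silla", "dog": "perro",
         "cat": "gato", "sun": "sol"}
spanish = {truth[w]: rotate(v, 0.7) for w, v in english.items()}

print(induce_dictionary(english, spanish))
```

In real data the two spaces are only approximately related by a rotation and have hundreds of dimensions, so practical systems replace the grid search with adversarial or statistical initialisation and use a matrix SVD for the Procrustes step.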

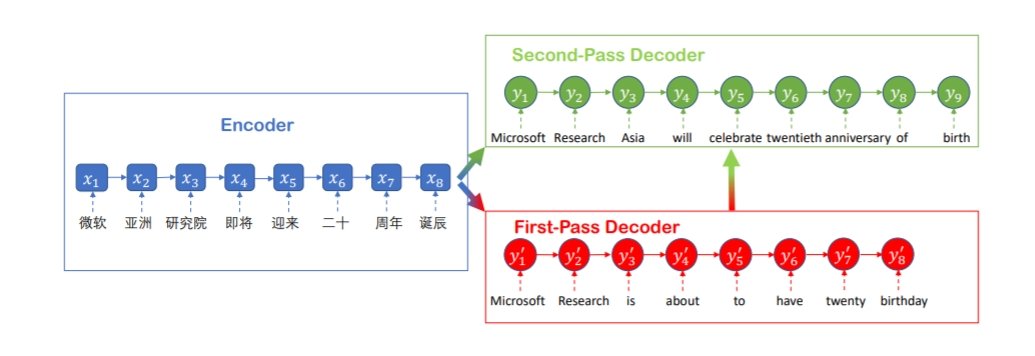

Several research papers related to this approach have been published. They rely on back-translation and on removing noise (denoising). Back-translation takes a sentence that has been roughly translated into another language and translates it back into the original language. If the result does not match the original text, the neural network adjusts itself. In this way, text that exists in only one language can be turned into a pair of sentences in two languages.
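A minimal sketch of the two signals back-translation provides, assuming toy word-by-word dictionaries stand in for the neural translation models (the helpers `translate`, `round_trip_error` and `back_translated_pairs` are hypothetical). `round_trip_error` measures how much of a sentence fails to survive source → target → source, which is the mismatch a real network would turn into a training loss; `back_translated_pairs` turns target-only text into synthetic parallel pairs.

```python
def translate(tokens, lexicon):
    """Toy word-by-word 'model': unknown words are copied unchanged."""
    return [lexicon.get(t, t) for t in tokens]

def round_trip_error(tokens, forward, backward):
    """Fraction of tokens that change after source -> target -> source."""
    restored = translate(translate(tokens, forward), backward)
    return sum(a != b for a, b in zip(tokens, restored)) / len(tokens)

def back_translated_pairs(target_only_text, backward):
    """Turn monolingual target-language sentences into synthetic
    (source, target) training pairs by translating them backwards."""
    return [(translate(s, backward), s) for s in target_only_text]

en_to_es = {"the": "el", "dog": "perro", "eats": "come"}
es_to_en = {"el": "the", "perro": "dog", "come": "eat"}  # deliberate error: "eat"

print(round_trip_error(["the", "dog", "eats"], en_to_es, es_to_en))
print(back_translated_pairs([["el", "perro", "come"]], es_to_en))
```

Here one of the three words (“eats” coming back as “eat”) fails the round trip; in a neural system that error would drive a gradient update rather than a dictionary edit.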

Denoising is similar to translation: words are deliberately dropped from a sentence or reordered, and the network is trained to reproduce the original sentence. Together, back-translation and denoising let a neural network learn the structure of a language more deeply.
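The corruption step can be sketched as follows, assuming the kind of noise described in published unsupervised translation work: each word is dropped with a small probability, then the survivors are shuffled locally by adding random jitter to their positions and sorting. The default values `drop_prob=0.1` and `k=3` and the helper names are illustrative assumptions. Because each jitter is strictly less than `k + 1`, no word can end up more than `k` positions from where it started.

```python
import random

def add_noise(tokens, drop_prob=0.1, k=3, rng=random):
    """Corrupt a sentence: drop words at random, then shuffle locally
    so that no surviving word moves more than k positions."""
    kept = [t for t in tokens if rng.random() >= drop_prob]
    # Jitter in [0, k + 1) on each position, then sort by jittered position.
    keys = [i + rng.random() * (k + 1) for i in range(len(kept))]
    order = sorted(range(len(kept)), key=lambda i: keys[i])
    return [kept[i] for i in order]

def denoising_pair(tokens, rng=random):
    """Training example for denoising: (noisy input, clean target)."""
    return add_noise(tokens, rng=rng), list(tokens)

sentence = ["the", "desk", "and", "the", "chair", "are", "new"]
noisy, clean = denoising_pair(sentence, random.Random(0))
print(noisy, "->", clean)
```

The network only ever sees monolingual text here: the clean sentence is its own target, so no translated data is needed.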

Of course, translations produced with this technology are not yet as accurate as Google Translate. Even so, these techniques are likely to raise translation accuracy over the long term, even for languages without abundant data.

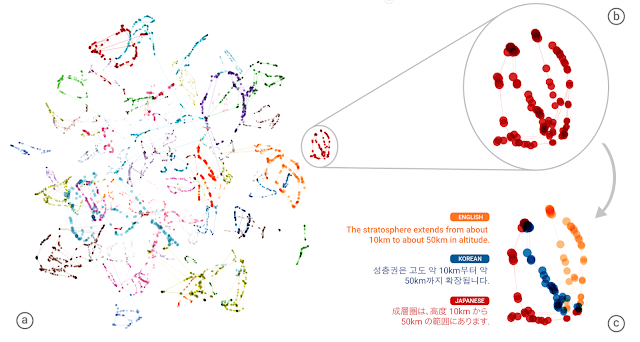

Interesting results have also been published in this area. After Google brought artificial intelligence into Google Translate with GNMT, zero-shot translation, the ability to translate between language pairs the system has never been trained on, became possible.

GNMT learns to translate from corpora: large, structured collections of natural-language sentences of the kind used in linguistics and natural language processing research. This lets it translate naturally into multiple languages. Normally, however, a model trained this way learns to translate only between the language pairs it was trained on, and it is not prepared for combinations of languages it has never seen.
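Google’s multilingual GNMT work describes a simple way to train one model on many language pairs: an artificial token such as `<2ja>` is prepended to each source sentence to say which language to produce. The sketch below, with a hypothetical `make_example` helper and one-word toy “sentences”, shows how such training data might be assembled, and why a request for an untrained pair (Korean to Japanese) is just a new combination of input language and target token rather than a separate model.

```python
def make_example(src_sentence, tgt_lang, tgt_sentence=None):
    """Prepend an artificial target-language token such as '<2ja>'."""
    return (f"<2{tgt_lang}> {src_sentence}", tgt_sentence)

# Trained directions (toy data): English<->Korean and English<->Japanese only.
parallel = [
    ("en", "ko", "dog", "개"),
    ("ko", "en", "개", "dog"),
    ("en", "ja", "dog", "犬"),
    ("ja", "en", "犬", "dog"),
]
training_data = [make_example(src, tl, tgt) for sl, tl, src, tgt in parallel]
trained_directions = {(sl, tl) for sl, tl, _, _ in parallel}

# Zero-shot request: Korean input with a Japanese target token.
zero_shot_input, _ = make_example("개", "ja")
print(training_data)
print(zero_shot_input, ("ko", "ja") in trained_directions)
```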

However, Google announced that its AI creates its own interlingua, an intermediate language for language processing. This interlingua is not a 1:1 match between words; instead, it holds information close to abstract concepts. For example, suppose the system has learned English↔Korean translation (English into Korean and Korean into English) and English↔Japanese translation. It can then translate between Japanese and Korean to some extent, even though the accuracy is lower. Even without having learned Japanese↔Korean translation, it can translate that pair zero-shot because of the interlingua. This suggests that a neural translation system such as GNMT places words and sentences with similar meanings or concepts in similar positions in its internal representation. In that sense, AI translation is closer to the human brain than ever before.
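As a rough illustration of why a shared representation enables zero-shot translation, the sketch below places words from three languages in one vector space and translates by nearest neighbour. The vectors are hand-set assumptions standing in for what a trained encoder would learn (GNMT’s interlingua is a learned high-dimensional space, not a small table), and `translate_word` is a hypothetical helper. No Korean↔Japanese pair is ever used; the words simply land near each other because they mean the same thing.

```python
import math

# Hypothetical shared "interlingua" space; a real model would learn these vectors.
shared_space = {
    "en": {"dog": (0.90, 0.10), "cat": (0.80, 0.50), "water": (-0.70, 0.60)},
    "ko": {"개": (0.88, 0.12), "고양이": (0.79, 0.52), "물": (-0.68, 0.61)},
    "ja": {"犬": (0.91, 0.09), "猫": (0.81, 0.48), "水": (-0.72, 0.58)},
}

def translate_word(word, src, tgt, space=shared_space):
    """Map a word into the shared space, then pick the closest target word."""
    v = space[src][word]
    return min(space[tgt], key=lambda w: math.dist(v, space[tgt][w]))

# Korean -> Japanese, a direction never paired directly.
print([translate_word(w, "ko", "ja") for w in ["개", "고양이", "물"]])
```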

Google also announced that it has developed a new neural network architecture called the Transformer, which it says outperforms recurrent neural networks (RNNs), until then regarded as an excellent approach to language understanding tasks such as language modeling, machine translation, and question answering.

To begin with RNNs: a common technique for image recognition and speech recognition is the deep neural network (DNN). A DNN can make a prediction from the input at a single point in time, but that is not enough to understand what is happening in a video or the meaning of speech. For this reason, RNNs, which can process time-series information about what comes before and after, were developed.

An RNN feeds the hidden (intermediate) state from the previous time step into the computation for the next time step’s input, which makes it a network structure that takes time-series information into account. An RNN maintains time-series information, and unrolled over time it can be viewed as one large DNN that connects the time steps. That is why RNNs can be used for language prediction.
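A minimal sketch of the recurrence described above, assuming the classic Elman form h_t = tanh(W_x·x_t + W_h·h_{t-1} + b). The weights are hand-picked toy values (a trained RNN would learn them) and `rnn_forward` is a hypothetical name. The usage compares the same two inputs in different orders to show that the final state depends on order, which is the time-series information a single-step DNN cannot capture.

```python
import math

def rnn_forward(inputs, W_x, W_h, b):
    """Elman RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b), starting from h_0 = 0.
    Returns the hidden state after every time step."""
    hidden_size = len(b)
    h = [0.0] * hidden_size
    states = []
    for x in inputs:
        # The previous hidden state h is fed back in alongside the new input x.
        h = [math.tanh(sum(W_x[i][j] * x[j] for j in range(len(x)))
                       + sum(W_h[i][k] * h[k] for k in range(hidden_size))
                       + b[i])
             for i in range(hidden_size)]
        states.append(h)
    return states

W_x = [[0.5, -0.3], [0.8, 0.1]]   # input -> hidden (toy values)
W_h = [[0.4, 0.0], [-0.2, 0.3]]   # hidden -> hidden (toy values)
bias = [0.0, 0.0]
first, second = [1.0, 0.0], [0.0, 1.0]

final_ab = rnn_forward([first, second], W_x, W_h, bias)[-1]
final_ba = rnn_forward([second, first], W_x, W_h, bias)[-1]
print(final_ab, final_ba)  # same inputs, different order, different final state
```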

According to Google, the Transformer, its neural network architecture said to be better at understanding language, requires far less computation to train than other neural networks. Beyond improving computational efficiency and accuracy, Google says the Transformer makes it possible to visualize which other parts of a sentence the network attends to when processing or translating a particular word, giving insight into how information flows through it. Transformer models can be developed with the TensorFlow library, and a Transformer network can be set up as a training environment with just a few commands.
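The visualizations Google describes come from the Transformer’s attention weights. Below is a minimal pure-Python sketch of scaled dot-product attention, softmax(QKᵀ/√d)·V, the core operation of the Transformer; it is not the TensorFlow API, and the tiny vectors are illustrative assumptions. Each row of `weights` says how strongly one word attends to every other word, which is exactly what attention heat maps plot.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention. Returns (outputs, weights)."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w[j] * values[j][c] for j in range(len(values)))
                        for c in range(len(values[0]))])
    return outputs, weights

keys = [[2.0, 0.0], [0.0, 2.0], [-2.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
outputs, weights = attention([[2.0, 0.0]], keys, values)
print(weights[0])  # most weight goes to the first key, which matches the query
```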

Technological development in AI translation is in full swing. Although Microsoft’s announcement covers only a specific language pair, it is significant that machine translation has been shown to match human translation in quality. If experts’ predictions hold, the era of AI translation will arrive within the next ten years, and it will draw attention as the walls between languages come down.
