The Cloud TPU Pod is a cloud-based supercomputer built from TPUs, the chips Google developed to accelerate machine learning. At Google I/O 2019, its developer event, Google announced beta versions of the Cloud TPU v2 Pod and the Cloud TPU v3 Pod. Google emphasized that the pods let machine learning researchers and engineers train models quickly.

Machine learning requires an enormous amount of computation to train a model on large amounts of data. Even on a high-end machine, training takes a long time. To solve this problem, Google develops the TPU, a silicon chip customized for machine learning. Google Cloud Platform also offers Cloud TPU, a service that rents out TPU systems in the cloud.

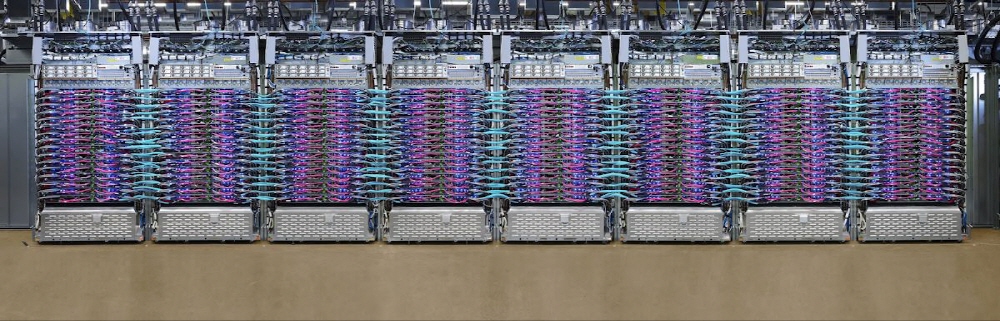

A TPU Pod connects more than 1,000 of these TPU chips through a data center network. The Cloud TPU v2 Pod and the Cloud TPU v3 Pod are cloud-based computers built on the second-generation and third-generation TPU, respectively.

The Cloud TPU v3 Pod, which uses the third-generation TPU 3.0 processor, is liquid-cooled to deliver high performance. It can complete ResNet-50 training on ImageNet, the Stanford dataset, in just 2 minutes. While some custom silicon chips can perform only a single function, the TPU is fully programmable, so a Cloud TPU Pod can be used to train a wide variety of machine learning models.

A Cloud TPU Pod is also available in smaller portions called slices. Google's machine learning team recommends that developers build their first model on an individual Cloud TPU and then move to larger Cloud TPU Pod slices as they scale up training. To request access to a Cloud TPU Pod or a slice, contact your Google Cloud representative through the contact form.
