NVIDIA held its annual GPU Technology Conference (GTC 2018) at the San Jose McEnery Convention Center in San Jose, California, from March 26 to 29.

In his keynote, NVIDIA CEO Jensen Huang announced the DGX-2 supercomputer and Drive Constellation, a driving simulator that lets autonomous vehicles cover billions of kilometers in virtual space. The keynote video can be found here (https://www.ustream.tv/channel/20165212).

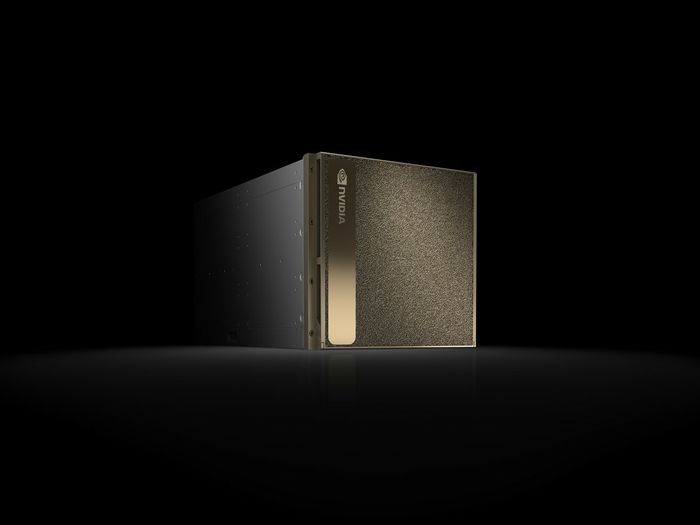

The DGX-2 is a supercomputer that links 16 Tesla V100 32GB GPUs with up to 2.4 terabytes per second of bandwidth, and NVIDIA describes it as the world's largest GPU. The Tesla V100 32GB doubles the memory of the Tesla V100, NVIDIA's data center GPU, from 16GB to 32GB.

The DGX-2 connects its 16 Tesla V100 32GB GPUs through 12 NVSwitch chips built on NVLink, giving it 512GB of total GPU memory and 81,920 CUDA cores.
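The headline totals follow directly from per-GPU figures. The minimal Python sketch below multiplies one GPU's resources by the GPU count; it uses 32GB per GPU from the text and 5,120 CUDA cores per Volta V100 GPU (the same core count quoted for the Quadro GV100 later in this article). The `GpuSpec` and `aggregate` names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    memory_gb: int
    cuda_cores: int


@dataclass(frozen=True)
class SystemTotals:
    gpu_count: int
    memory_gb: int   # total across all GPUs
    cuda_cores: int  # total across all GPUs


def aggregate(gpu: GpuSpec, count: int) -> SystemTotals:
    """Sum per-GPU resources across `count` identical GPUs."""
    return SystemTotals(
        gpu_count=count,
        memory_gb=gpu.memory_gb * count,
        cuda_cores=gpu.cuda_cores * count,
    )


V100_32GB = GpuSpec(name="Tesla V100 32GB", memory_gb=32, cuda_cores=5_120)

if __name__ == "__main__":
    dgx2 = aggregate(V100_32GB, 16)
    print(f"{dgx2.gpu_count} GPUs, {dgx2.memory_gb} GB, {dgx2.cuda_cores:,} CUDA cores")
    # prints "16 GPUs, 512 GB, 81,920 CUDA cores"
```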

The DGX-2's strengths are price-performance and power efficiency: it delivers about 2 PFLOPS of half-precision floating-point performance while drawing about 10kW. The DGX-2 costs $399,000, but according to Jensen Huang, matching its performance with CPU-based servers would cost $3 million and consume 180kW of power.

The DGX-2 offers deep learning performance comparable to 300 servers occupying 15 racks, while taking up one-sixtieth of the space and delivering up to 18 times the power efficiency. “NVIDIA is driving platform performance at speeds that exceed Moore’s Law,” said Jensen Huang, who emphasized that the DGX-2 and related products will enable breakthroughs in many areas, including medicine, transportation, and scientific exploration.
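The 18x power-efficiency figure can be checked from the two power numbers quoted in the keynote. This Python sketch takes that framing at face value and assumes the CPU servers deliver the same ~2 PFLOPS of peak throughput as the DGX-2, only at a higher power draw; under that assumption the ratio reduces to 180kW / 10kW.

```python
def gflops_per_watt(flops: float, watts: float) -> float:
    """Peak throughput per watt, in GFLOPS/W."""
    return flops / watts / 1e9


# Keynote figures. Assumption: the CPU servers match the DGX-2's throughput.
THROUGHPUT_FLOPS = 2e15       # ~2 PFLOPS (half precision)
DGX2_POWER_W = 10e3           # ~10 kW
CPU_CLUSTER_POWER_W = 180e3   # ~180 kW

dgx2 = gflops_per_watt(THROUGHPUT_FLOPS, DGX2_POWER_W)
cpu = gflops_per_watt(THROUGHPUT_FLOPS, CPU_CLUSTER_POWER_W)
print(f"DGX-2 {dgx2:.0f} GFLOPS/W vs CPU servers {cpu:.1f} GFLOPS/W: {dgx2 / cpu:.0f}x")
# prints "DGX-2 200 GFLOPS/W vs CPU servers 11.1 GFLOPS/W: 18x"
```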

Drive Constellation is a virtual simulation system for testing autonomous vehicles. It generates virtual environments that closely resemble the real world and lets an autonomous vehicle drive through them, so the vehicle's AI can be tested without using a physical car. This is expected to improve the safety of autonomous vehicles under development and make testing more scalable.

Drive Constellation is built on two servers. The first runs simulation software that generates the output of the sensors mounted on an autonomous vehicle, such as cameras, lidar, and radar. The second is NVIDIA DRIVE Pegasus, an AI car computer that runs the autonomous driving software and processes the simulated sensor data as if it were coming from a car actually driving on the road.

Bringing autonomous vehicles to market requires billions of kilometers of driving tests and validation to ensure safety and reliability. Drive Constellation is designed to address this: virtual simulation can supply billions of kilometers of scenarios and tests, improving the safety and reliability of the driving algorithms. Compared with testing on real roads, it is also said to save both time and cost.

In this system, the simulation server's GPUs generate the sensor data and stream it to DRIVE Pegasus for processing. DRIVE Pegasus sends its driving commands back to the simulator, closing a hardware-in-the-loop cycle. This cycle runs 30 times per second and is used to validate that the algorithms and software running on DRIVE Pegasus operate the simulated vehicle correctly.
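The closed loop described above can be sketched in a few lines of Python. This is a minimal illustration, not NVIDIA's software: `SensorSimulator` and `VehicleComputer` are hypothetical stand-ins for the simulation server and DRIVE Pegasus, the "sensor" is a single distance-to-obstacle reading, and the vehicle is a toy 1-D braking model with an assumed 8 m/s² deceleration. Each iteration produces a frame, hands it to the vehicle computer, and applies the returned command, advancing simulated time by 1/30 s.

```python
from __future__ import annotations

from dataclasses import dataclass

FRAME_RATE_HZ = 30
FRAME_PERIOD_S = 1.0 / FRAME_RATE_HZ  # ~33.3 ms per loop iteration
MAX_DECEL_MPS2 = 8.0                  # toy braking limit (assumption)


@dataclass
class SensorFrame:
    timestamp_s: float
    obstacle_distance_m: float  # stand-in for camera/lidar/radar output


@dataclass
class DriveCommand:
    brake: float  # 0.0 = no braking, 1.0 = full braking


class SensorSimulator:
    """Hypothetical stand-in for the simulation server: produces sensor
    frames and applies driving commands to a toy 1-D vehicle model."""

    def __init__(self, speed_mps: float, obstacle_distance_m: float) -> None:
        self.speed_mps = speed_mps
        self.obstacle_distance_m = obstacle_distance_m
        self.time_s = 0.0

    def render(self) -> SensorFrame:
        return SensorFrame(self.time_s, self.obstacle_distance_m)

    def apply(self, cmd: DriveCommand) -> None:
        # Slow down according to the brake command, then advance one frame.
        self.speed_mps = max(0.0, self.speed_mps - MAX_DECEL_MPS2 * cmd.brake * FRAME_PERIOD_S)
        self.obstacle_distance_m -= self.speed_mps * FRAME_PERIOD_S
        self.time_s += FRAME_PERIOD_S


class VehicleComputer:
    """Hypothetical stand-in for the driving software on the vehicle computer."""

    def decide(self, frame: SensorFrame) -> DriveCommand:
        return DriveCommand(brake=1.0 if frame.obstacle_distance_m < 30.0 else 0.0)


def run_hil(sim: SensorSimulator, computer: VehicleComputer, frames: int) -> list[SensorFrame]:
    """Closed loop: simulated sensors -> vehicle computer -> command -> simulator."""
    log = []
    for _ in range(frames):
        frame = sim.render()
        log.append(frame)
        sim.apply(computer.decide(frame))
    return log


if __name__ == "__main__":
    sim = SensorSimulator(speed_mps=20.0, obstacle_distance_m=100.0)
    log = run_hil(sim, VehicleComputer(), frames=10 * FRAME_RATE_HZ)  # 10 s of driving
    stopped_safely = sim.speed_mps == 0.0 and sim.obstacle_distance_m > 0.0
    print(f"{len(log)} frames, final gap {sim.obstacle_distance_m:.1f} m, pass={stopped_safely}")
```

A real hardware-in-the-loop rig would also pace each iteration against a wall clock so the vehicle computer receives frames at 30 Hz; the sketch advances simulated time only, which keeps the validation check (the car stops before the obstacle) deterministic.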

The simulation software generates the sensor data stream across a wide variety of test environments: unusual weather such as rainstorms and snowstorms, blinding sunlight at different times of day, limited visibility at night, and every kind of terrain and road across billions of kilometers. This lets developers test how autonomous vehicles react without putting people at risk. Drive Constellation will be available from the third quarter of this year.
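One way to picture how such a range of environments is produced is as a test matrix, where every combination of weather, lighting, and road type becomes a scenario. The Python sketch below is a hypothetical illustration only: the axis values and the `hard_cases` filter are invented for this example and are not Drive Constellation's actual configuration.

```python
from itertools import product
from typing import Dict, Iterable, Iterator, List

# Hypothetical test-matrix axes, loosely modeled on the conditions listed above.
WEATHER = ["clear", "rainstorm", "snowstorm"]
LIGHTING = ["dawn glare", "midday", "dusk glare", "night"]
ROAD = ["highway", "urban street", "rural road", "mountain pass"]

Scenario = Dict[str, str]


def scenarios() -> Iterator[Scenario]:
    """Yield every combination of weather, lighting, and road type."""
    for weather, lighting, road in product(WEATHER, LIGHTING, ROAD):
        yield {"weather": weather, "lighting": lighting, "road": road}


def hard_cases(candidates: Iterable[Scenario]) -> List[Scenario]:
    """Keep combinations that are rare or risky to stage on public roads:
    bad weather combined with glare or darkness."""
    return [
        s for s in candidates
        if s["weather"] != "clear" and s["lighting"] != "midday"
    ]


if __name__ == "__main__":
    all_cases = list(scenarios())
    print(f"{len(all_cases)} scenarios, {len(hard_cases(all_cases))} hard cases")
    # prints "48 scenarios, 24 hard cases"
```

Enumerating the full product makes coverage explicit; in practice a simulator would also vary continuous parameters (traffic, speeds, sensor noise) within each scenario.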

NVIDIA also announced the Quadro GV100, a graphics card that supports real-time ray tracing. Ray tracing produces near-photorealistic computer graphics by modeling how light travels through a scene and reaches the eye, following the same physical behavior as in the natural world. The Quadro GV100 can perform it in real time.
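To illustrate the basic idea, the Python sketch below does ray casting, the first step of ray tracing: it traces one ray per character cell backward from the eye into the scene (the usual direction, since most light never reaches the eye), intersects each ray with a single sphere, and shades hits with Lambert's cosine law. This is only a toy; it is not NVIDIA RTX, and a full ray tracer would also spawn shadow and reflection rays from each hit. The scene layout, light direction, and character palette are arbitrary choices.

```python
import math
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def hit_sphere(origin: Vec, direction: Vec, center: Vec, radius: float) -> Optional[float]:
    """Distance t along a unit-length ray to the nearest sphere hit in front of
    the origin, or None. Solves |origin + t*direction - center|^2 = radius^2."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer root first
        if t > 1e-9:
            return t
    return None


def render(width: int = 40, height: int = 20) -> List[str]:
    """Trace one ray per character cell from an eye at the origin looking down +z."""
    center, radius = (0.0, 0.0, 3.0), 1.0
    to_light = normalize((-1.0, 1.0, -1.0))  # light from upper left, on the eye's side
    palette = " .:-=+*#%@"                     # dark -> bright
    rows = []
    for j in range(height):
        row = []
        for i in range(width):
            # Map the cell to a point on an image plane at z = 1.
            x = (i + 0.5) / width * 2.0 - 1.0
            y = 1.0 - (j + 0.5) / height * 2.0
            d = normalize((x, y, 1.0))
            t = hit_sphere((0.0, 0.0, 0.0), d, center, radius)
            if t is None:
                row.append(" ")  # ray escapes: background
                continue
            normal = normalize(sub(scale(d, t), center))
            brightness = max(0.0, dot(normal, to_light))  # Lambert's cosine law
            row.append(palette[min(len(palette) - 1, int(brightness * len(palette)))])
        rows.append("".join(row))
    return rows


if __name__ == "__main__":
    print("\n".join(render()))
```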

The card adopts NVIDIA RTX, a real-time ray tracing technology, and is based on the Volta architecture. It has 5,120 CUDA cores, 640 Tensor Cores, and 32GB of HBM2 memory, and delivers 7.4 TFLOPS of double-precision (FP64), 14.8 TFLOPS of single-precision (FP32), and 118.5 TFLOPS of Tensor performance.
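The three throughput figures are consistent with one another. Assuming each CUDA core completes one fused multiply-add (2 FLOPs) per clock, each Volta Tensor Core completes 64 FMAs (128 FLOPs) per clock, and FP64 runs at half the FP32 rate, the quoted 14.8 TFLOPS implies a clock of roughly 1.45 GHz, and that clock reproduces the other two numbers. The clock here is derived from the quoted figures, not an official specification.

```python
from typing import Dict

CUDA_CORES = 5_120
TENSOR_CORES = 640

FLOPS_PER_CUDA_CORE_PER_CLOCK = 2      # one fused multiply-add (FMA) = 2 FLOPs
FLOPS_PER_TENSOR_CORE_PER_CLOCK = 128  # Volta Tensor Core: 64 FMAs per clock
FP64_PER_FP32_RATE = 0.5               # GV100 has half as many FP64 units as FP32 units


def implied_clock_ghz(fp32_tflops: float) -> float:
    """Clock implied by a quoted FP32 peak, assuming every CUDA core issues one FMA per clock."""
    return fp32_tflops * 1e12 / (CUDA_CORES * FLOPS_PER_CUDA_CORE_PER_CLOCK) / 1e9


def peak_tflops(clock_ghz: float) -> Dict[str, float]:
    """Peak throughput for each precision at the given clock."""
    clock_hz = clock_ghz * 1e9
    fp32 = CUDA_CORES * FLOPS_PER_CUDA_CORE_PER_CLOCK * clock_hz / 1e12
    return {
        "fp64": fp32 * FP64_PER_FP32_RATE,
        "fp32": fp32,
        "tensor": TENSOR_CORES * FLOPS_PER_TENSOR_CORE_PER_CLOCK * clock_hz / 1e12,
    }


if __name__ == "__main__":
    clock = implied_clock_ghz(14.8)  # ~1.445 GHz
    for name, tflops in peak_tflops(clock).items():
        print(f"{name}: {tflops:.1f} TFLOPS")
```

The Tensor result comes out at about 118.4 TFLOPS; the small gap against the quoted 118.5 is consistent with rounding in the published figures.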

Two Quadro GV100 cards can be combined over NVLink to expand memory to 64GB, enabling collaborative design in virtual reality and faster AI-assisted rendering. The price is $8,999.

NVIDIA also announced several partnerships at the event. The company will work with ARM, the SoftBank-owned semiconductor IP company, to integrate the NVIDIA Deep Learning Accelerator (NVDLA) into Project Trillium, ARM's machine learning platform. NVIDIA says this will make it easy for IoT chip companies to integrate AI into their designs and bring intelligent, low-cost products to billions of consumers.

NVIDIA also announced the integration of its TensorRT inference software into Google's TensorFlow framework. Broader support for AI frameworks is intended to improve deep learning inference performance while helping to reduce server costs.
